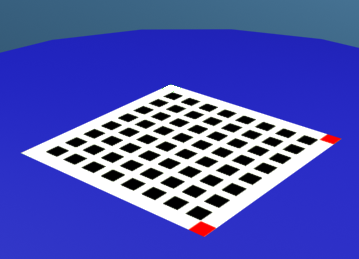

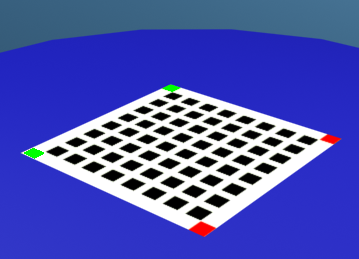

The first step in camera calibration is to find a pattern that works well for the situation at hand. Initially, I made a custom pattern with 8x8 black squares and 2 red squares (corner markers) for alignment, for example, to keep track of the board's orientation when the entire pattern is rotated by 90 degrees. The idea was to find the centers of these 64 black squares and use them for calibration.

To locate the black squares, I used a technique similar to the one I developed for my DIP assignment last semester: the axis (the line joining the centers of the red boxes) is swept across the board, perpendicular to itself. Each time the axis is shifted by a small amount, we check how many new boxes it has crossed. Once 8 new boxes have been crossed, we mark them as a column.
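The sweep can be sketched roughly as follows. This is a minimal illustration, not the original assignment code: the function name `sweep_columns`, the use of box centers as a stand-in for "the line has crossed the box", and the fixed step size are all assumptions.

```python
import math


def sweep_columns(centers, p0, p1, step=1.0, per_column=8):
    """Group box centers into columns by sweeping the line p0-p1 along its normal.

    Hypothetical helper: `centers` are (x, y) box centers and p0/p1 are the two
    red-marker centers. A box counts as crossed once the line passes its center.
    """
    ux, uy = p1[0] - p0[0], p1[1] - p0[1]
    norm = math.hypot(ux, uy)
    ux, uy = ux / norm, uy / norm      # unit vector along the axis
    nx, ny = -uy, ux                   # unit normal: the sweep direction
    # Offset of each center from the axis (along the normal), and its position
    # along the axis (used to order the boxes inside a column).
    offset = [(x - p0[0]) * nx + (y - p0[1]) * ny for x, y in centers]
    along = [(x - p0[0]) * ux + (y - p0[1]) * uy for x, y in centers]
    order = sorted(range(len(centers)), key=lambda i: offset[i])

    columns, pending, k = [], [], 0
    line = offset[order[0]] if order else 0.0   # start at the nearest box
    while k < len(order):
        # Collect every box whose center the shifted line has now passed.
        while k < len(order) and offset[order[k]] <= line:
            pending.append(order[k])
            k += 1
        # Once enough new boxes have been crossed, close off a column.
        while len(pending) >= per_column:
            column, pending = pending[:per_column], pending[per_column:]
            columns.append(sorted(column, key=lambda i: along[i]))
        line += step
    return columns
```

Because every column is assumed to sit at a fixed offset from the axis, this grouping is only reliable when the board is viewed roughly head-on.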

Calibration pattern

The problem with this technique, as I later found out, is that it does not account for the fact that the entire board has been distorted by a perspective projection, so in the image the columns are generally no longer parallel to the red axis. This means that, partway across the board, the sweeping axis gathers eight boxes that do not actually belong to the same column. For example, it may pick up the first 7 boxes of the current column and the first box of the next one, while missing the last box of the current column entirely.

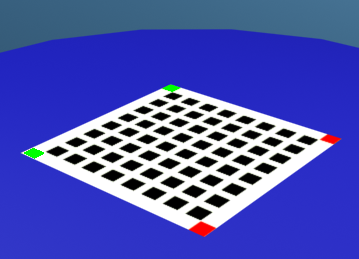

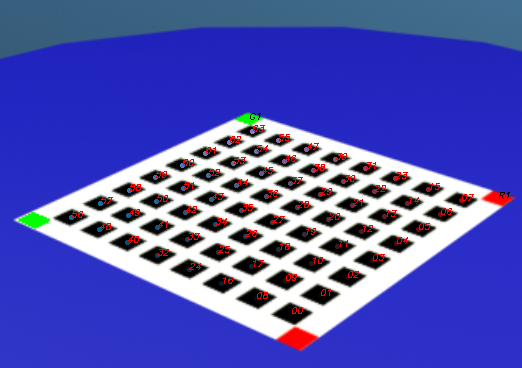

To fix this problem, I added two more corner markers in green. With these, I can locate and uniquely label all four corners of the board, which also means that the perspective distortion can now be taken into account. The sweeping axis is no longer simply translated. Instead, consider two primary axes, one formed by the red boxes and another formed by the green boxes. The sweeping line is obtained by linearly interpolating between these two axes, so its direction changes gradually from that of the red axis to that of the green axis as it crosses the board.
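A minimal sketch of the interpolated sweep, assuming the red corners `r0`, `r1` and green corners `g0`, `g1` are already known and that `r0`/`g0` and `r1`/`g1` lie on the same side of the board. The helper names and the fixed number of interpolation steps are hypothetical; each sweep line joins the points a fraction `t` of the way from each red corner to its green partner.

```python
def lerp(a, b, t):
    """Point a fraction t of the way from a to b."""
    return (a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t)


def side(a, b, p):
    """2-D cross product: positive if p is left of the directed line a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def along(a, b, p):
    """Projection of p onto the direction a->b (orders boxes along the line)."""
    return (p[0] - a[0]) * (b[0] - a[0]) + (p[1] - a[1]) * (b[1] - a[1])


def sweep_columns_interp(centers, r0, r1, g0, g1, steps=1000, per_column=8):
    """Sweep a line from the red axis r0-r1 (t = 0) to the green axis g0-g1 (t = 1)."""
    toward_green = 1 if side(r0, r1, g0) > 0 else -1
    crossed, pending, columns = set(), [], []
    for s in range(steps + 1):
        t = s / steps
        a, b = lerp(r0, g0, t), lerp(r1, g1, t)  # interpolated sweep line
        for i, p in enumerate(centers):
            # A box counts as crossed once it is no longer on the green side.
            if i not in crossed and side(a, b, p) * toward_green <= 0:
                crossed.add(i)
                pending.append(i)
        while len(pending) >= per_column:
            column, pending = pending[:per_column], pending[per_column:]
            columns.append(sorted(column, key=lambda i: along(a, b, centers[i])))
    return columns
```

Linear interpolation in image coordinates only approximates the true projective spacing of the columns; it works as long as each interpolated line separates consecutive columns.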

New Calibration pattern for perspective correction

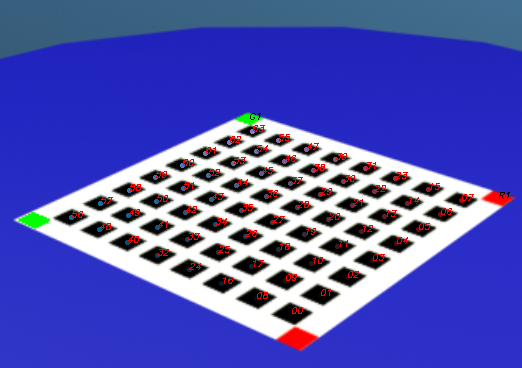

To identify the various features (red, green and black boxes), I generalised the code from my calibration assignment so that it works with any color. One caveat is that the adaptive thresholding I developed operates on the grayscale image, but since these images are taken in well-lit conditions, this does not matter much in practice. A to-do item is to move all the 'magic' constants in the code into a config file, so they can easily be adjusted when the lighting conditions change.
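As a rough illustration of color-parameterised feature detection, the sketch below finds blob centroids for a named color. Note that it swaps in a simpler technique than the post describes: fixed per-channel RGB ranges rather than grayscale adaptive thresholding. The `COLOR_RANGES` values, the function names and the list-of-rows image format are all assumptions; the ranges are exactly the kind of constants that could live in a config file.

```python
from collections import deque

# Hypothetical per-color RGB ranges: ((r_lo, g_lo, b_lo), (r_hi, g_hi, b_hi)).
# In practice these "magic" constants could be loaded from a config file.
COLOR_RANGES = {
    "red": ((150, 0, 0), (255, 90, 90)),
    "green": ((0, 150, 0), (90, 255, 90)),
    "black": ((0, 0, 0), (60, 60, 60)),
}


def in_range(pixel, low, high):
    return all(lo <= v <= hi for v, lo, hi in zip(pixel, low, high))


def find_blobs(image, color, min_area=4, ranges=COLOR_RANGES):
    """Return (x, y) centroids of 4-connected blobs of `color`.

    `image` is a list of rows of (r, g, b) tuples.
    """
    low, high = ranges[color]
    h, w = len(image), len(image[0])
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y0 in range(h):
        for x0 in range(w):
            if seen[y0][x0] or not in_range(image[y0][x0], low, high):
                continue
            seen[y0][x0] = True
            queue, pixels = deque([(x0, y0)]), []
            while queue:  # breadth-first flood fill over matching pixels
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and in_range(image[ny][nx], low, high)):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) >= min_area:  # ignore specks of noise
                n = len(pixels)
                centroids.append((sum(p[0] for p in pixels) / n,
                                  sum(p[1] for p in pixels) / n))
    return centroids
```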

Labeled Calibration pattern

Numerically stable solution:

To solve the system of equations and get the projection matrix, I used the least squares technique detailed in last semester's course. It involved calculating the pseudo inverse of a matrix X as [(X'X)^-1 X']. I immediately found that this solution did not work at all for my problem. In the DIP course, our input points lay on two different planes. Here, all my inputs were on a single plane (z = constant), so the column of X that multiplies z is just a constant multiple of the column of ones. X therefore has linearly dependent columns and X'X is singular, which made the system blow up and give me either zero or a very large number as the answer each time. I found that I could get correct results if I randomly perturbed the z values by small amounts.
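The rank problem can be checked on synthetic data. This is a small sketch, not the post's code: it builds the usual linear system for the 11 unknown entries of P (with p34 fixed to 1) from a made-up camera matrix `P_true`, then compares a two-plane target (planes Z = 0 and X = 0) with a single-plane one (Z = 0). The helper names and point layouts are assumptions.

```python
import numpy as np


def design_matrix(world, image):
    """Linear system A p = b for the 11 unknown entries of P (p34 fixed to 1)."""
    A, b = [], []
    for (X, Y, Z), (u, v) in zip(world, image):
        A.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z])
        b.append(u)
        A.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z])
        b.append(v)
    return np.array(A, dtype=float), np.array(b, dtype=float)


def project(P, world):
    """Project 3-D points with a 3x4 matrix P and dehomogenise."""
    h = np.hstack([world, np.ones((len(world), 1))]) @ P.T
    return h[:, :2] / h[:, 2:]


def normal_equations(A, b):
    """Least squares via the pseudo inverse (A'A)^-1 A'."""
    return np.linalg.inv(A.T @ A) @ A.T @ b


# Hypothetical ground-truth camera (p34 = 1), used only to synthesise data.
P_true = np.array([[1.0, 0.1, 0.2, 0.5],
                   [0.05, 1.0, 0.1, 0.3],
                   [0.01, 0.02, 0.01, 1.0]])

grid = [(i, j) for i in range(1, 5) for j in range(1, 5)]
two_planes = np.array([(x, y, 0) for x, y in grid] + [(0, y, z) for y, z in grid], float)
one_plane = np.array([(x, y, 0) for x in range(1, 9) for y in range(1, 5)], float)

A2, b2 = design_matrix(two_planes, project(P_true, two_planes))
A1, b1 = design_matrix(one_plane, project(P_true, one_plane))

print(np.linalg.matrix_rank(A2), normal_equations(A2, b2))
# With every point at Z = 0 the columns multiplying Z are all zero, so A1 is
# rank deficient and A1'A1 cannot be meaningfully inverted.
print(np.linalg.matrix_rank(A1))
```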

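On Rohit's suggestion, I tried computing the pseudo inverse using SVD. It worked wonderfully! The SVD method is more numerically stable than the regular pseudo inverse technique: it never forms X'X (whose condition number is the square of that of X), and singular values that are effectively zero can be discarded instead of inverted, which gives the minimum-norm least squares solution even when X is rank deficient. To make things better, OpenCV already implements an SVD-based solver for systems of linear equations (cvSolve with the CV_SVD method). This not only ensured correct results, but also vastly simplified the code (OpenCV matrix code tends to get messy).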
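A minimal NumPy sketch of the SVD-based pseudo inverse, assuming the same hypothetical synthetic setup as a single-plane board (Z = 0). With A = U S V', the solution is V S^+ U' b, where S^+ inverts only the significant singular values; the `rcond` cutoff is an assumed parameter. In OpenCV's Python bindings, `cv2.solve(A, b, flags=cv2.DECOMP_SVD)` plays a similar role to `cvSolve` with `CV_SVD`.

```python
import numpy as np


def svd_solve(A, b, rcond=1e-10):
    """Minimum-norm least squares solution of A p = b via A = U S V'."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Invert only singular values that are significant relative to the largest;
    # the rest correspond to directions the data cannot constrain and are zeroed.
    s_inv = np.zeros_like(s)
    keep = s > rcond * s[0]
    s_inv[keep] = 1.0 / s[keep]
    return Vt.T @ (s_inv * (U.T @ b))


def design_matrix(world, image):
    """Linear system A p = b for the 11 unknown entries of P (p34 fixed to 1)."""
    A, b = [], []
    for (X, Y, Z), (u, v) in zip(world, image):
        A.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z])
        b.append(u)
        A.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z])
        b.append(v)
    return np.array(A, dtype=float), np.array(b, dtype=float)


def project(P, world):
    """Project 3-D points with a 3x4 matrix P and dehomogenise."""
    h = np.hstack([world, np.ones((len(world), 1))]) @ P.T
    return h[:, :2] / h[:, 2:]


# Hypothetical camera and a planar board at Z = 0, used only to synthesise data.
P_true = np.array([[1.0, 0.1, 0.2, 0.5],
                   [0.05, 1.0, 0.1, 0.3],
                   [0.01, 0.02, 0.01, 1.0]])
one_plane = np.array([(x, y, 0) for x in range(1, 9) for y in range(1, 5)], float)

A, b = design_matrix(one_plane, project(P_true, one_plane))
p = svd_solve(A, b)
P_est = np.append(p, 1.0).reshape(3, 4)
print(P_est)
```

For a planar board, the third column of the estimated P stays at zero: the data cannot constrain it, so the minimum-norm solution leaves it there, and the estimate is only valid for points on that plane.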