There are many commercial tools to back up a live file system. In AFS you can clone to a slave server. In XFS, you can freeze, back up, and unfreeze. Any file-system-level snapshot will generally require you to first stop any services, such as relational databases, whose consistency depends on the state of multiple files.

Here is a very convenient way to back up partitions that are on Linux software RAID1 (on two partitions sda1 and sdb1, say). Attach an external disk with partition sdc1 exactly the same size and type (Linux RAID autodetect) as sda1 and sdb1. Extend the RAID1 to three disks. Let the logical RAID device synchronize, which goes much faster than a filesystem-level backup. Finally, fail and remove sdc1.

If the RAID1 fails, you will probably have to run fsck on sdc1 before mounting it somewhere, but in my experience this fsck has never failed. And if you can remount the partition read-only while synchronization happens, you are guaranteed a clean backup.

Some specific commands follow.

In reality I use /dev/disk/by-id/... instead of /dev/sdc1 to be safe.
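The procedure described above might look roughly like the following sketch. The device names /dev/md0 and /dev/sdc1 are taken from the example; the `run` helper, the `raid1_backup` function, and the `DRY_RUN` switch are illustrative assumptions and not part of mdadm. With `DRY_RUN=1` (the default here) the commands are only printed, so you can review them before running anything against a real array.

```shell
#!/bin/sh
# Sketch only: temporarily grow a two-disk RAID1 to three disks as a backup.
# DRY_RUN, run and raid1_backup are hypothetical names used for illustration.

DRY_RUN="${DRY_RUN:-1}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

raid1_backup() {
    array="$1"   # the RAID1 device, e.g. /dev/md0
    spare="$2"   # partition on the external disk, e.g. /dev/sdc1

    run mdadm "$array" --add "$spare"               # joins the array as a spare
    run mdadm --grow "$array" --raid-devices=3      # make it an active third mirror
    run mdadm --wait "$array"                       # block until the resync finishes
    run mdadm "$array" --fail "$spare" --remove "$spare"
    run mdadm --grow "$array" --raid-devices=2      # return to a two-disk mirror
}
```

Calling `raid1_backup /dev/md0 /dev/sdc1` prints the five commands in order. Setting `DRY_RUN=0` would execute them, which should only be done as root and after checking the device names twice.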

So you read the notes below, and set up software RAID5 /dev/md0 on Linux. One day a disk /dev/sdg fails. So you duly

    mdadm /dev/md0 -r /dev/sdg1

and replace the disk. While doing this in a hotswap enclosure, the new /dev/sdg replacement bumps against /dev/sdf, failing that too, not because of a real problem with /dev/sdf but a transient contact failure. On reboot, you find /dev/md0 gone. What now?

Relax, most of the time this will do the trick. When booting, drop into a shell when md0 drops dead. Inside the shell,

    mdadm --assemble --force /dev/md0 /dev/sd[bcdefhijklm]1

(Adjust devices as per your installation, obviously. The above is for a 12-disk RAID5.) Note that /dev/sdg1 is missing.
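Before forcing the assembly, it can help to look at each member's superblock. `mdadm --examine` prints an Events counter per device, and a member that dropped out only briefly will usually be just a few events behind the others. The sketch below prints those inspection commands followed by the forced assembly. The `run` helper, the `force_assemble` function, and `DRY_RUN` are hypothetical names for illustration; only the mdadm invocations themselves are real.

```shell
#!/bin/sh
# Sketch only: inspect members, then force-assemble a degraded array.
# DRY_RUN, run and force_assemble are hypothetical names used for illustration.

DRY_RUN="${DRY_RUN:-1}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

force_assemble() {
    array="$1"
    shift
    # Show each member's superblock; compare the "Events" lines by eye.
    for dev in "$@"; do
        run mdadm --examine "$dev"
    done
    # Assemble from the listed members even if their event counts differ.
    run mdadm --assemble --force "$array" "$@"
}
```

For the 12-disk example this would be called as `force_assemble /dev/md0 /dev/sd[bcdefhijklm]1`, letting the shell expand the glob into the eleven surviving members.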

If this fails, you are toast. Otherwise, rejoice and

    # fsck /dev/md0
    # badblocks -sw /dev/sdg1
    # mdadm --add /dev/md0 /dev/sdg1

and allow four to six weeks for a rebuild. Preferably mount read-only until the rebuild is complete.
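While the rebuild runs, the kernel reports progress in /proc/mdstat. A minimal sketch for pulling out just the percentage is shown below. The function name `rebuild_progress` is an assumption made for this example, and the pattern assumes the usual `recovery = N%` or `resync = N%` wording of mdstat output.

```shell
#!/bin/sh
# Sketch only: extract rebuild progress from mdstat-formatted text on stdin.
# rebuild_progress is a hypothetical helper name.

rebuild_progress() {
    # Keep only the "recovery = 12.3%" or "resync = 12.3%" fragment of each line.
    grep -Eo '(recovery|resync) *= *[0-9.]+%'
}
```

On a live system this would be used as `rebuild_progress < /proc/mdstat`. It prints nothing when no array is rebuilding.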

Before we get started: If you value what you do, RAID (hardware or software) is not an alternative to offsite backups (and regular restore drills).

"Host RAID" cards (e.g. LSI MegaRAID-133-2) that use a proprietary driver and hog the PC's CPUs are worse than useless. Avoid these like the plague. Luckily, some PATA/SATA host RAIDs allow the RAID function to be disabled.

Low-end "real hardware" RAID cards (e.g., IBM ServeRAID4Lx) typically use Intel IOPs between 100MHz and 400MHz. Remember, "hardware" RAID is just software running on another processor. When your main CPU was 500MHz, this would be a great assist, but if your main CPU is (2x) 3GHz HT, this is peanuts.

Also, your system RAM has grown from 128MB to 4GB in the last few years, and your "entry level" RAID card is still stuck at 32--128MB. Who can cache better?

"Mid-range" (e.g. IBM/Adaptec ServeRAID6M) or even "high end" RAID cards go up to 600MHz IOPs and 256MB cache at best. This is still small compared to your typical server.

Summary: If you have a reliably hooked up UPS, use software RAID. Linux swraid disk scheduling is already quite sophisticated, probably much smarter than cheap RAID firmware. Speaking of smart, a swraid will also allow smartctl to access the disks directly and produce PFAs that cheap RAID cards cannot.
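Because software RAID leaves the member disks visible to the operating system, each one can be queried individually. The sketch below prints one `smartctl` call per disk, using `-H` for the overall health verdict and `-A` for the vendor attribute table. The `run` helper, the `smart_check` function, and `DRY_RUN` are hypothetical names for illustration, and the device names in the usage note are placeholders.

```shell
#!/bin/sh
# Sketch only: query SMART health for each member disk of a software RAID.
# DRY_RUN, run and smart_check are hypothetical names used for illustration.

DRY_RUN="${DRY_RUN:-1}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

smart_check() {
    for disk in "$@"; do
        # -H: overall health self-assessment, -A: vendor-specific attributes
        run smartctl -H -A "$disk"
    done
}
```

A call such as `smart_check /dev/sda /dev/sdb` covers both halves of a mirror. Note that the whole disks are passed, not the RAID partitions.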

Another advantage of sw RAID1 is that in the worst case you can pretty much recover all the data "by hand" from the surviving disk, whereas RAID cards use proprietary stripe formats with metadata and you cannot mount these partitions directly.

If you are really stuck with a hardware RAID card, or like it because of the transparent MBR failover, never use RAID5, because this actually makes the RAID IOP work. It's best when not used, like virtual memory.

Always use RAID1 or RAID10. Disk is much cheaper than buying SCSI cables and enclosures that let you upgrade to Ultra320 to compensate for the extra parity calculation workload and disk writes of RAID5. If you have more than five disks, try not to RAID5 all of them together if you care about performance over capacity.

If you are using software RAID1 or RAID10, make sure you have written the bootloader into all MBRs, so that your computer can boot even if the primary boot drive fails. GRUB can do this easily. We use GRUB for DOS. Your BIOS must be smart enough to fail over the boot sequence. Most modern BIOSes are.
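As a rough illustration of writing the bootloader to every MBR, the sketch below prints one `grub-install` call per disk. This assumes a system with GRUB's standard `grub-install` tool rather than the GRUB for DOS setup mentioned above, so treat it as an analogue and not the exact procedure used here. The `run` helper, the `install_boot_all` function, and `DRY_RUN` are hypothetical names for illustration.

```shell
#!/bin/sh
# Sketch only: install the bootloader on every disk of a mirrored boot set.
# Assumes grub-install is available; DRY_RUN, run and install_boot_all are
# hypothetical names used for illustration.

DRY_RUN="${DRY_RUN:-1}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

install_boot_all() {
    for disk in "$@"; do
        # Target the whole disk (its MBR), not a partition.
        run grub-install "$disk"
    done
}
```

For a two-disk mirror this would be `install_boot_all /dev/sda /dev/sdb`. It is worth testing by actually booting with the primary disk disconnected.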

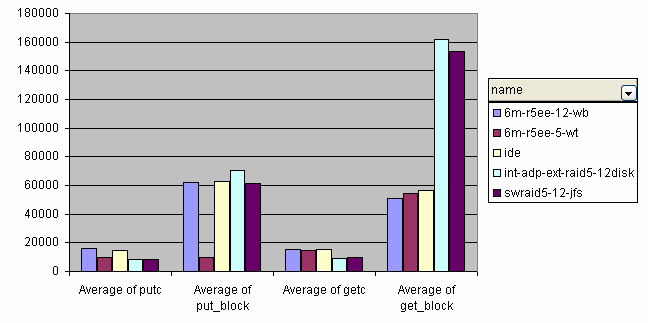

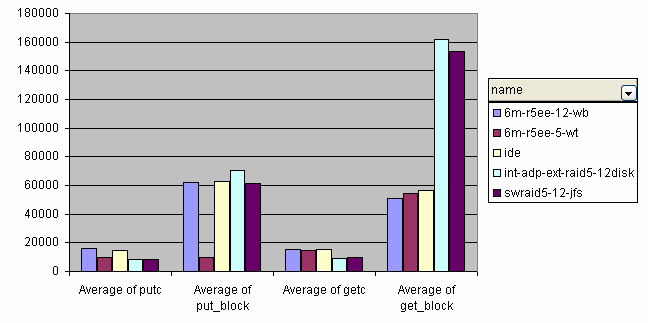

The numbers were very educational for me. A single old PATA133 disk does better than a midrange Ultra320 SCSI RAID card with 10krpm Ultra320 disks in terms of block rewrite, block read, and directory operations exercised by bonnie++, while costing less than 10% of the SCSI solution.

A PATA133 RAID10 suffers essentially no write penalty and gives you 100% redundancy and much better performance at 20% of the cost of the SCSI solution. I have heard claims that SCSI disks last longer but who cares, we will get petabyte RAM implants in our kidneys before either our PATA/SATA or SCSI disks die.

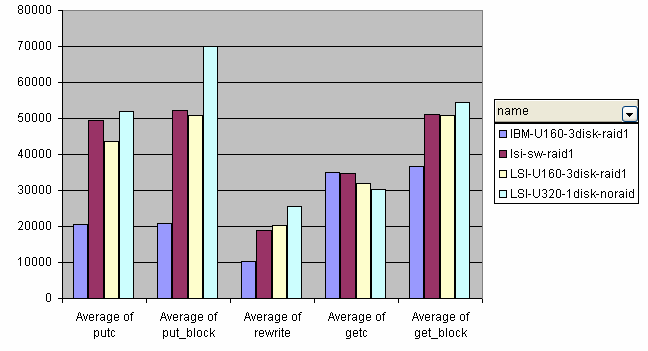

The newer LSI22320RB card supports only RAID1 and is cheaper than the ServeRAIDs, but has a more reliable performance profile. At least hwraid and swraid are very close in performance. Note that the ServeRAID4Lx does not have a battery-backed write-back cache.