| July 24 |

pdf/html |

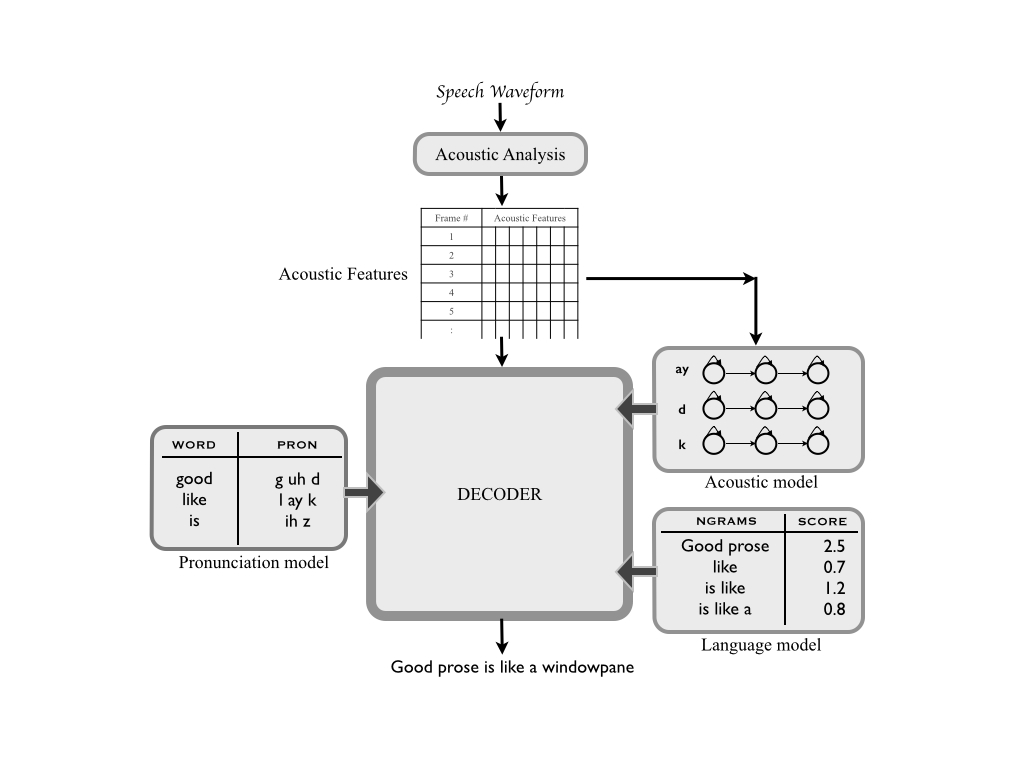

Introduction to Statistical Speech Recognition |

S. Young, Large vocabulary continuous speech recognition: A review, IEEE Signal Processing Magazine, 1996.

If you want a refresher on machine learning basics, go through Part I of the following book: Deep Learning |

| July 27 |

pdf/html |

Introduction to WFSTs and WFST algorithms |

(Read Sections 2.1-2.3 and 3) M. Mohri, F. Pereira, M. Riley, Speech recognition with weighted finite-state transducers, Springer Handbook of Speech Processing, 559-584, 2008.

[Additional reading] M. Mohri, F. Pereira, M. Riley, The Design Principles of a Weighted Finite-State Transducer Library, Theoretical Computer Science, 231(1): 17-32, 2000.

[Additional reading] M. Mohri, Semiring frameworks and algorithms for shortest-distance problems, Journal of Automata, Languages and Combinatorics, 7(3):321-350, 2002.

|

| July 31 |

pdf/html |

WFST algorithms continued |

(Read Sections 2.4 and 2.5) M. Mohri, F. Pereira, M. Riley, Speech recognition with weighted finite-state transducers, Springer Handbook of Speech Processing, 559-584, 2008.

[Additional reading] M. Mohri, Weighted Automata Algorithms, Handbook of Weighted Automata, Springer Berlin Heidelberg, 213-254, 2009. (For pseudocode and more details on determinization/minimization of WFSAs.) |

| Aug 3 |

pdf/html |

WFSTs continued + WFSTs in ASR |

(Required reading) M. Mohri, F. Pereira, M. Riley, Weighted Finite-State Transducers in Speech Recognition, Computer Speech and Language, 16(1):69-88, 2002.

|

| Aug 7 |

pdf/html |

Hidden Markov Models (Part I) |

(Read Sections I to V) Lawrence R. Rabiner, A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition, Proceedings of the IEEE, 77(2), 257-286, 1989.

(Required reading) D. Jurafsky, J. H. Martin, "Chapter 9: Hidden Markov Models", Speech and Language Processing, Draft of November 7, 2016.

|

| Aug 10 |

pdf/html |

Hidden Markov Models (Part II) |

(Required reading) Both articles listed against Aug 7.

[Additional reading] A. P. Dempster, N. M. Laird, D. B. Rubin, Maximum Likelihood from Incomplete Data via the EM Algorithm, Journal of the Royal Statistical Society, Series B, 39(1):1-38, 1977.

[Additional reading] J. Bilmes, A gentle tutorial of the EM algorithm and its application to parameter estimation for Gaussian mixture and hidden Markov models, International Computer Science Institute, Technical Report TR-97-021, 1998.

|

| Aug 14 |

pdf/html |

Hidden Markov Models (Part III) |

(Required reading) Both articles listed against Aug 7.

(Required reading) S. J. Young, J. J. Odell, P. C. Woodland, Tree-based state tying for high accuracy acoustic modelling, Proceedings of the Workshop on Human Language Technology (HLT), ACL, 1994.

(Useful reading) J. Zhao, X. Zhang, A. Ganapathiraju, N. Deshmukh, and J. Picone, Tutorial for Decision Tree-Based State Tying For Acoustic Modeling, 1999.

|

| Aug 17 |

pdf/html |

DNNs in ASR |

(Required reading) G. Hinton, L. Deng, D. Yu, G. E. Dahl, A. Mohamed, N. Jaitly, A. Senior, V. Vanhoucke, P. Nguyen, T. N. Sainath, and B. Kingsbury, Deep Neural Networks for Acoustic Modeling in Speech Recognition, IEEE Signal Processing Magazine, 29(6):82-97, 2012.

(Useful reading, Chapters 1 and 2) Michael Nielsen, Neural Networks and Deep Learning, Jan 2017.

(Useful reading) N. Morgan and H. A. Bourlard, An Introduction to Hybrid HMM/Connectionist Continuous Speech Recognition, 1995.

(Useful reading) H. Hermansky, D. Ellis, and S. Sharma, Tandem Connectionist Feature Extraction for Conventional HMM Systems, Proceedings of ICASSP, 2000.

|

| Aug 21 |

pdf/html |

RNN-based models in ASR |

(Required reading) A. Graves, N. Jaitly, Towards End-to-end Speech Recognition with Recurrent Neural Networks, Proceedings of ICML, 2014.

(Useful reading) Z. Lipton, J. Berkowitz, C. Elkan, A critical review of recurrent neural networks for sequence learning, arXiv preprint arXiv:1506.00019, 2015.

|

| Aug 24 |

pdf/html |

Acoustic Feature Extraction for ASR + Basics of speech production |

(Required reading) D. Jurafsky, J. H. Martin, Speech and Language Processing, 2nd edition, Section 9.3 Feature Extraction: MFCC vectors. (Shared via Moodle.)

[Additional reading] D. Jurafsky, J. H. Martin, "Chapter 7: Phonetics", Speech and Language Processing (2nd edition), 2008.

|

| Aug 28 |

pdf/html |

Language Modeling (Part I) |

(Required reading) D. Jurafsky, J. H. Martin, "Chapter 4: Language Modeling with N-grams", Speech and Language Processing, Draft of November 7, 2016.

[Additional reading] C. E. Shannon, Prediction and entropy of printed English, Bell System Technical Journal, 30(1):50-64, 1951.

|

| Aug 31 |

pdf/html |

Language Modeling (Part II) |

(Required reading) D. Jurafsky, J. H. Martin, "Chapter 4: Language Modeling with N-grams", Speech and Language Processing, Draft of November 7, 2016.

(Required reading) S. F. Chen, J. Goodman, An empirical study of smoothing techniques for language modeling, Computer Speech and Language, 13(4):359-394, 1999.

|

| Sep 7 |

pdf/html |

Mid-semester revision lecture |

-

|

| Sep 11, 14 |

- |

Midsem week |

-

|

| Sep 18 |

pdf/html |

Language Modeling (Part III) |

(Required reading) H. Schwenk, Continuous space language models, Computer Speech and Language, 21(3):492-518, 2007.

(Required reading) T. Mikolov et al., Recurrent neural network based language model, Proc. of Interspeech, 2010.

|

| Sep 21 |

pdf/html |

Search and decoding (Part I) |

(Required reading) D. Jurafsky, J. H. Martin, Speech and Language Processing, 2nd edition, Chapter 10. (Shared via Moodle.)

|

| Sep 25 |

pdf/html |

Search and decoding (Part II) |

[Additional reading] L. Mangu, E. Brill and A. Stolcke, Finding consensus in speech recognition: word error minimization and other applications of confusion networks, Computer Speech and Language, 14(4):373-400, 2000.

|

| Sep 28 |

pdf/html |

Discriminative Training |

(Required reading, Sections 1, 2, 3.2 and 5) K. Vertanen, An overview of Discriminative Training for Speech Recognition.

|

| Oct 5 |

quiz3 |

Quiz 3 |

Quiz 3 was handed out in class and the solutions were discussed. |

| Oct 9 |

pdf/html |

Advanced Neural Models for ASR |

(Useful reading) A. Graves and N. Jaitly, Towards End-to-end Speech Recognition with Recurrent Neural Networks, Proceedings of ICML, 2014.

(Useful reading) A. Maas, Z. Xie, D. Jurafsky, A. Ng, Lexicon-Free Conversational Speech Recognition with Neural Networks, NAACL, 2015.

|

| Oct 12 |

pdf/html |

Advanced Neural Models for ASR |

(Useful reading) Papers mentioned within the slides.

|

| Oct 16 |

pdf/html |

Pronunciation Modeling |

(Useful reading) Papers mentioned within the slides.

|

| Oct 23 |

pdf/html |

Speaker Adaptation |

(Useful reading) Papers mentioned within the slides.

|

| Oct 26 |

pdf/html |

Conversational Agents |

(Required reading) D. Jurafsky, J. H. Martin, "Chapter 29: Dialog Systems and Chatbots", Speech and Language Processing, Draft of August 28, 2017.

(Required reading) D. Jurafsky, J. H. Martin, "Chapter 30: Advanced Dialog Systems", Speech and Language Processing, Draft of August 28, 2017.

|

| Oct 30 |

pdf/html |

Quiz 4 + Intro to speech synthesis |

Quiz 4 was handed out in class and the solutions were discussed. Speech synthesis was briefly introduced. |

| Nov 2 |

pdf/html |

Statistical parametric speech synthesis (Part I) |

(Required reading) H. Zen, K. Tokuda, A. W. Black, Statistical Parametric Speech Synthesis, Speech Communication, 2009.

|

| Nov 6 |

pdf/html |

Statistical parametric speech synthesis (Part II) |

(Useful reading) Z.-H. Ling et al., Deep Learning for Acoustic Modeling in Parametric Speech Generation, IEEE Signal Processing Magazine, 2015.

|