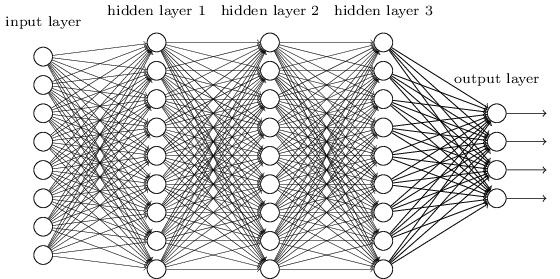

We continue with the same task we investigated in Lab Assignment 7: designing classifiers for the OCR of scanned handwritten digits. In this assignment, you will design a feed-forward neural network (also popularly known as a Multilayer Perceptron) classifier for the task. Feed-forward neural networks form the basis for modern Deep Learning models. However, the networks you will experiment with in this assignment are still relatively small compared to some modern instances of neural networks.

An artificial neural network with 3 hidden layers

(Image source: http://neuralnetworksanddeeplearning.com/images/tikz41.png.)

To test your code, you are provided a set of toy examples (1 through 4 below), each a 2-dimensional, 2-class problem. You are also provided tools to visualise the performance of the neural networks you will train on these problems. The plots show the true separating boundary in blue. Each data set has a total of 10,000 examples, divided into a training set of 8000 examples, validation set of 1000 examples and test set of 1000 examples.

Data set 5 is the MNIST data set with which you experimented in Lab Assignment 7, although this time we have included more points from the original data set.

Data set 1 (linearly separable): The input X is a list of 2-dimensional vectors. Every example Xi is represented by a 2-dimensional vector [x, y]. The output yi corresponding to the ith example is either 0 or 1. The two classes can be separated by a straight line, as shown in Figure 1.

Data set 2 (XOR-like): The input X is a list of 2-dimensional vectors. Every example Xi is represented by a 2-dimensional vector [x, y]. The output yi corresponding to the ith example is either 0 or 1. The labels follow an XOR-like distribution. That is, the first and third quadrants have the same label (yi = 1), and the second and fourth quadrants have the same label (yi = 0), as shown in Figure 2.
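To make the XOR-like labelling rule concrete, here is a minimal numpy sketch of it. It only illustrates how labels relate to quadrants; it is not the generator used for the provided data set, and `xor_label` is a hypothetical helper.

```python
import numpy as np

def xor_label(points):
    """Return 1 for points in the first and third quadrants (x and y share a sign), else 0.

    points: array of shape (n, 2), one [x, y] example per row.
    Points lying exactly on an axis get label 0 under this sketch.
    """
    points = np.asarray(points, dtype=float)
    return (points[:, 0] * points[:, 1] > 0).astype(int)

example = np.array([[1.0, 2.0], [-1.0, 2.0], [-3.0, -0.5], [2.0, -1.0]])
print(xor_label(example))  # [1 0 1 0]
```

No single straight line can produce this labelling. That is why this data set needs at least one hidden layer, whereas data set 1 does not.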

Data set 3 (circle): The input X is a list of 2-dimensional vectors. Every example Xi is represented by a 2-dimensional vector [x, y]. The output yi corresponding to the ith example is either 0 or 1. Each class (yi = 1 and yi = 0) is arranged approximately on the periphery of a circle of unknown radius R, with some spread W, as shown in Figure 3.

Data set 4 (semicircle): The input X is a list of 2-dimensional vectors. Every example Xi is represented by a 2-dimensional vector [x, y]. The output yi corresponding to the ith example is either 0 or 1. Each class (yi = 1 and yi = 0) is arranged approximately on the periphery of a semicircle of unknown radius R, with some spread W, as shown in Figure 4.

We continue to use the MNIST data set, which contains a collection of handwritten digits (0-9) as 28x28-sized binary images. Therefore, the input X is represented as a vector of size 784 (= 28 x 28).
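If you ever need to move between the image and vector representations, the flattening is a single numpy `reshape`. The sketch below uses a hypothetical batch of random 0/1 images purely for illustration. The provided `readMNIST()` may already return flattened vectors.

```python
import numpy as np

# A hypothetical batch of three 28x28 binary images (random pixels, for illustration only).
rng = np.random.default_rng(0)
images = rng.integers(0, 2, size=(3, 28, 28))

# Flatten each image row by row into a 784-dimensional vector (28 * 28 = 784).
X = images.reshape(images.shape[0], -1)
print(X.shape)  # (3, 784)
```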

These images have been size-normalized and centered in a fixed-size image.

For the previous assignment, we used only 5000 images for training the Perceptron. A neural network has many neurons that need to be trained, and consequently many tunable parameters, so it typically needs a lot more data to be trained successfully. MNIST provides a total of 70,000 examples, divided into a test set of 10,000 images and a training set of 60,000 images. In this assignment, we will carve out a validation set of 10,000 images from the MNIST training set and use the remaining 50,000 examples for training.
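The following sketch shows the 60,000 to 50,000 + 10,000 split. It assumes the last 10,000 training examples are held out. The assignment does not say which examples form the validation set, and the provided `mnist.pkl.gz` together with `readMNIST()` may already perform this split for you. `split_train_valid` is a hypothetical helper, and the arrays are zero-filled stand-ins with MNIST's shapes.

```python
import numpy as np

def split_train_valid(X, Y, n_valid):
    """Hold out the last n_valid examples as a validation set (hypothetical helper)."""
    if not 0 < n_valid < len(X):
        raise ValueError("n_valid must be between 1 and len(X) - 1")
    return (X[:-n_valid], Y[:-n_valid]), (X[-n_valid:], Y[-n_valid:])

# Stand-in arrays with the MNIST training-set shape: 60,000 examples of 784 features.
X = np.zeros((60000, 784), dtype=np.uint8)
Y = np.zeros(60000, dtype=np.int64)
(trainX, trainY), (validX, validY) = split_train_valid(X, Y, n_valid=10000)
print(trainX.shape, validX.shape)  # (50000, 784) (10000, 784)
```

Slicing returns views, so no data is copied. If the examples were stored in some systematic order, you would shuffle them before splitting.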

In this assignment, we will code a feed-forward neural network from scratch using python3 and the numpy library.

The base code for this assignment is available in this compressed file. Below is the list of files present in the lab08-base directory.

| File Name | Description |
|---|---|
| nn.py | This file contains base code for the neural network implementation. |
| test_feedforward.py | This file contains code to test your feedforward() implementation. |
| visualize.py | This file contains the code for visualisation of the neural network's predictions on toy data sets. |
| visualizeTruth.py | This file contains the code to visualise the actual data distribution of toy data sets. |
| tasks.py | This file contains base code for the tasks that you need to implement. |
| test.py | This file contains base code for testing your neural network on different data sets. |
| util.py | This file contains some of the methods used by the above code files. |

All data sets are provided in the datasets directory.

We start by building the neural network framework. The base code for it is provided in nn.py.

The base code consists of the NeuralNetwork class which has the following methods.

- __init__: Constructor method of the NeuralNetwork class. The various parameters are initialised and set using this method. Their descriptions can be found in nn.py. NeuralNetwork stores the parameters self.weights and self.biases, which are initialised from a normal distribution with mean 0 and standard deviation 1. self.weights is a list of matrices (numpy arrays) corresponding to the weights in the various layers of the network. self.biases is the corresponding list of biases for each layer.
- train: This method is used to train the neural network on a training data set. Optionally, you can also provide the validation data set, to compute validation accuracy while training. Further description of the method's input parameters is available in the base code.
- computeLoss: This method takes as input the activations of the final layer of the neural network (the predictions), activations, and the actual labels Y, and computes the squared error loss.
- computeAccuracy: This method takes as input the predicted labels in one-hot encoded form, predLabels, and the actual labels Y, and computes the accuracy of the prediction. The four toy data sets require neural nets with two outputs, and the MNIST neural net will have 10 outputs. In each case, the output with the highest activation provides the label.
- validate: This method takes as input the validation data set, validX and validY, and computes the validation set accuracy using the currently trained neural network model.

You will only have to write code inside the following functions.

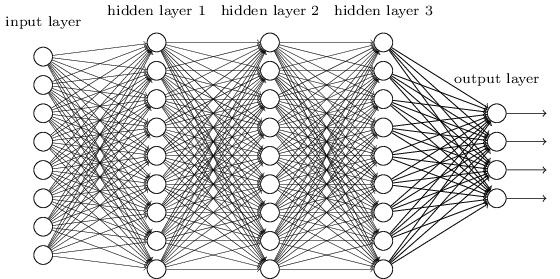

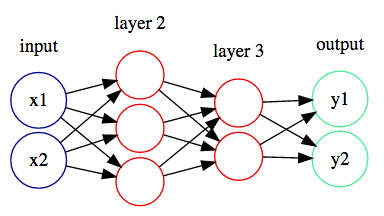

- feedforward: This method takes as input the training input data X and returns the activations at the various layers of the neural network, from the input layer through to the output layer. The activations must be returned as a list of numpy arrays. For example, for a neural network with inputSize 5, outputSize 2, numHiddenLayers 2 and hiddenLayerSizes [4, 3], the activations would be returned as a list of numpy arrays of sizes 5, 4, 3 and 2 respectively.
- backpropagate: This method takes as input the activations returned by the feedforward method and the true output labels Y, and updates the weights, self.weights, and biases, self.biases, of the neural network instance.

In this task, you will complete the feedforward method in the nn.py file. This method takes as input the training input data X and returns the activations at each layer, from the first layer (the input layer) to the output layer of the network, as a list of numpy arrays. For example, for a neural network with inputSize 2, outputSize 2, numHiddenLayers 2 and hiddenLayerSizes [3, 2], the activations would be returned as a list of numpy arrays of sizes 2, 3, 2 and 2 respectively.
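Below is a minimal, standalone numpy sketch of the forward pass. It rests on assumptions that nn.py may not share:

- every layer uses the sigmoid activation;
- weights[l] has shape (n_l, n_{l+1}) and biases[l] has shape (1, n_{l+1});
- each row of X is one example.

`sigmoid` and `feedforward` here are illustrations, not the NeuralNetwork.feedforward method itself. Check nn.py for the actual activation function and array shapes before adapting this.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def feedforward(X, weights, biases):
    """Return [a0, a1, ..., aL]: the input layer first, the output layer last.

    Assumes weights[l] has shape (n_l, n_{l+1}), biases[l] has shape (1, n_{l+1}),
    each row of X is one example, and every layer uses the sigmoid activation.
    """
    activations = [X]
    for W, b in zip(weights, biases):
        # Matrix form: the whole batch passes through one layer in a single np.dot.
        activations.append(sigmoid(np.dot(activations[-1], W) + b))
    return activations

# inputSize 2, hiddenLayerSizes [3, 2], outputSize 2, with N(0, 1) initialisation.
sizes = [2, 3, 2, 2]
rng = np.random.default_rng(0)
weights = [rng.standard_normal((m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [rng.standard_normal((1, n)) for n in sizes[1:]]
X = rng.standard_normal((5, 2))  # a batch of 5 examples
print([a.shape for a in feedforward(X, weights, biases)])
# [(5, 2), (5, 3), (5, 2), (5, 2)]
```

Each returned array has one row per example, so "size" in the description above corresponds to the number of columns.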

To test your feedforward implementation, run the following command.

python3 test_feedforward.py

In this task, you are required to complete the backpropagate() method in the nn.py file. This method takes as input the activations returned by the feedforward method and the true output labels Y, and updates the weights, self.weights, and biases, self.biases, of the neural network instance.

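You should use the matrix form of the backpropagation algorithm, which processes a whole batch of examples with a few matrix operations instead of looping over individual examples, for faster and more efficient computation.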
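The sketch below shows one matrix-form backpropagation step. It shares the forward-pass assumptions of sigmoid activations everywhere, weights[l] of shape (n_l, n_{l+1}) and row-per-example batches. It further assumes:

- Y is one-hot;
- the loss is 0.5 * sum((a - Y)**2) averaged over the batch;
- the update is plain gradient descent with learning rate `lr`.

The exact constant in the base code's computeLoss may differ. That only rescales the gradient, and so the effective learning rate. All names here are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def feedforward(X, weights, biases):
    # Input layer first, output layer last.
    activations = [X]
    for W, b in zip(weights, biases):
        activations.append(sigmoid(np.dot(activations[-1], W) + b))
    return activations

def squared_error(output, Y):
    # Assumed loss: 0.5 * sum of squared errors, averaged over the batch.
    return 0.5 * np.sum((output - Y) ** 2) / Y.shape[0]

def backpropagate(activations, Y, weights, biases, lr):
    """One gradient-descent step on squared_error, in matrix form (updates lists in place)."""
    n = Y.shape[0]
    out = activations[-1]
    # Output-layer error dLoss/dz = (a - Y) * sigmoid'(z) / n, where sigmoid'(z) = a * (1 - a).
    delta = (out - Y) * out * (1 - out) / n
    for l in range(len(weights) - 1, -1, -1):
        grad_W = np.dot(np.transpose(activations[l]), delta)  # shape (n_l, n_{l+1})
        grad_b = np.sum(delta, axis=0, keepdims=True)          # shape (1, n_{l+1})
        if l > 0:
            a = activations[l]
            # Push the error back one layer using weights[l] before it is updated.
            delta = np.dot(delta, np.transpose(weights[l])) * a * (1 - a)
        weights[l] -= lr * grad_W
        biases[l] -= lr * grad_b

# Usage: one small step on a random batch; the loss should go down.
rng = np.random.default_rng(1)
sizes = [2, 3, 2]
weights = [rng.standard_normal((m, k)) for m, k in zip(sizes[:-1], sizes[1:])]
biases = [rng.standard_normal((1, k)) for k in sizes[1:]]
X = rng.standard_normal((8, 2))
Y = np.eye(2)[rng.integers(0, 2, size=8)]  # one-hot targets
before = squared_error(feedforward(X, weights, biases)[-1], Y)
backpropagate(feedforward(X, weights, biases), Y, weights, biases, lr=0.1)
after = squared_error(feedforward(X, weights, biases)[-1], Y)
print(before, after)
```

A finite-difference check of a few weights, comparing (loss(w + eps) - loss(w - eps)) / (2 * eps) against the computed gradient, is a cheap way to catch sign or transpose mistakes.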

For calculating the dot product of two numpy arrays, refer to the numpy documentation.

For example, to compute the dot product C of two matrices, say A of size 4x3 and B of size 3x5, use the following code:

C=np.dot(A, B)

C will have the shape 4x5.

For calculating the transpose of a numpy array, refer to the numpy documentation.

For example, to compute the transpose AT of a matrix A of size 3x5, use the following code:

AT=np.transpose(A)

To perform an element-wise product between two vectors or matrices of the same dimensions, use the * operator.

For example, to compute the element-wise product of A and B having the same dimensions, use A*B.
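The three operations can be compared side by side in a short numpy sketch. The arrays are arbitrary examples chosen so that the shapes are easy to follow.

```python
import numpy as np

A = np.arange(12, dtype=float).reshape(4, 3)  # 4x3: [[0, 1, 2], [3, 4, 5], ...]
B = np.ones((3, 5))                           # 3x5

C = np.dot(A, B)      # matrix product (4x3)(3x5) -> 4x5; A @ B is equivalent
AT = np.transpose(A)  # transpose 4x3 -> 3x4; A.T is equivalent
D = A * A             # element-wise product of same-shaped arrays -> 4x3

print(C.shape, AT.shape, D.shape)  # (4, 5) (3, 4) (4, 3)

try:
    A * B  # shapes (4, 3) and (3, 5) are neither equal nor broadcastable
except ValueError as err:
    print("element-wise product failed:", err)
```

Mismatched shapes do not always raise an error. numpy broadcasting will silently turn, for example, an (n, 1) array times an (n,) array into an (n, n) result. Checking `.shape` after each step of backpropagation is therefore worthwhile.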

Your backpropagation code will be tested on the four toy data sets (Tasks 2.1 to 2.4), and 6 marks will be awarded if you get accuracies over 90% on each of them.

Complete taskLinear() in tasks.py.

To test your backpropagation code on this data set, run the following commands:

python3 test.py 1 seedValue

python3 visualizeTruth.py 1

Complete taskSquare() in tasks.py.

To test your backpropagation code on this data set, run the following commands:

python3 test.py 2 seedValue

python3 visualizeTruth.py 2

Complete taskCircle() in tasks.py.

To test your backpropagation code on this data set, run the following commands:

python3 test.py 3 seedValue

python3 visualizeTruth.py 3

Complete taskSemiCircle() in tasks.py.

To test your backpropagation code on this data set, run the following commands:

python3 test.py 4 seedValue

python3 visualizeTruth.py 4

In this task, you are required to report the optimal parameters (learning rate, number of hidden layers, number of nodes in each hidden layer, batch size and number of epochs) of the neural network for each of the above 4 tasks. You must report the minimal topology of the neural network, i.e., the minimal number of nodes and the minimal number of hidden layers, for each task. For example, for the linearly separable data set, you should be able to get more than 90% accuracy with just one hidden node.
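When comparing candidate topologies, it can help to count their trainable parameters. A fully connected layer from n_in to n_out units has n_in * n_out weights plus n_out biases. The helper below, `count_parameters`, is hypothetical and not part of the base code. The assignment asks for the minimal number of nodes and hidden layers, not the minimal parameter count, so treat this only as a comparison aid.

```python
def count_parameters(input_size, hidden_sizes, output_size):
    """Number of weights plus biases in a fully connected feed-forward network."""
    sizes = [input_size] + list(hidden_sizes) + [output_size]
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

# One hidden node on a 2-input, 2-output toy problem: (2*1 + 1) + (1*2 + 2) = 7.
print(count_parameters(2, [1], 2))     # 7
# Two hidden layers [4, 3]: (2*4 + 4) + (4*3 + 3) + (3*2 + 2) = 35.
print(count_parameters(2, [4, 3], 2))  # 35
```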

Write your observations in observations.txt. Also, explain the observations.

The MNIST data set is provided as the file mnist.pkl.gz.

The data is read using the readMNIST() method implemented in util.py.

You are required to instantiate a NeuralNetwork class object and train the neural network using the training data from the MNIST data set. You are required to choose appropriate hyperparameters for training the neural network. To obtain full marks, your network should achieve a test accuracy of 90% or more across many different random seeds. You will get 2 marks if your test accuracy is below 90% but still exceeds 85%. Otherwise, you will get 1 mark if your test accuracy is at least 75%.
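With 10 outputs, the predicted digit is the output with the highest activation, as described for computeAccuracy. Here is a minimal sketch of one-hot encoding and argmax-based accuracy. `one_hot` and `accuracy` are hypothetical helpers, not the base code's computeAccuracy, and the activations are made up for illustration.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Convert integer labels of shape (n,) into one-hot rows of shape (n, num_classes)."""
    return np.eye(num_classes)[labels]

def accuracy(output_activations, Y_one_hot):
    """Fraction of examples whose highest-activation output matches the true class."""
    predicted = np.argmax(output_activations, axis=1)
    true = np.argmax(Y_one_hot, axis=1)
    return np.mean(predicted == true)

labels = np.array([3, 0, 7])
Y = one_hot(labels, 10)
outputs = np.full((3, 10), 0.1)
outputs[0, 3] = 0.9  # correct
outputs[1, 5] = 0.8  # wrong: the true class is 0
outputs[2, 7] = 0.6  # correct
print(accuracy(outputs, Y))  # 2 of 3 correct, about 0.667
```

Under this measure, the full-marks target corresponds to an accuracy of at least 0.90 on the test set.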

The code can be run using

python3 test.py 5 seedVal

where seedVal is a suitable integer value used to initialise the seed of the random number generator.

You are required to compress the lab08-base directory with your updated code for the files nn.py, tasks.py and observations.txt. In nn.py, you will have to fill out feedforward() and backpropagate(). In tasks.py, you will complete Tasks 2.1, 2.2, 2.3, 2.4 and 3 by editing the corresponding snippets of code. In observations.txt, you will report minimal topologies on the toy problems as part of Task 2.5, and explain your observations.

Place these three files in a directory, compress it as rollno_lab08.tar.gz, and upload it on Moodle.
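The archive can be created with the standard `tar -czf` command. If you prefer to stay in Python, the standard-library `tarfile` module can do the same. In the sketch below, `make_submission` is a hypothetical helper, and "rollno" is a placeholder for your own roll number.

```python
import os
import tarfile

def make_submission(src_dir, archive_name):
    """Pack src_dir (recursively) into a gzip-compressed tar archive named archive_name."""
    with tarfile.open(archive_name, "w:gz") as tar:
        # arcname keeps only the directory's own name inside the archive, not its full path.
        tar.add(src_dir, arcname=os.path.basename(os.path.normpath(src_dir)))

# Example call, with "rollno" replaced by your roll number:
# make_submission("rollno_lab08", "rollno_lab08.tar.gz")
```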