CS 747: Programming Assignment 3

(TA in charge: Pragy Agarwal)

In this assignment, you will implement and compare different

algorithms for reinforcement learning. Your agent will be placed in an

environment, and will try to reach a goal state by taking actions. Its

objective is to maximise expected cumulative reward. The algorithms

you will implement are Sarsa($\lambda$) (with accumulating or

replacing traces) and Q-learning.

Environment

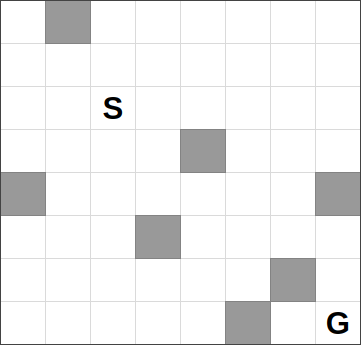

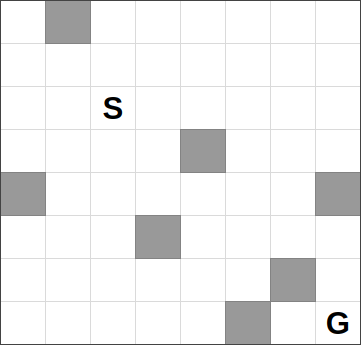

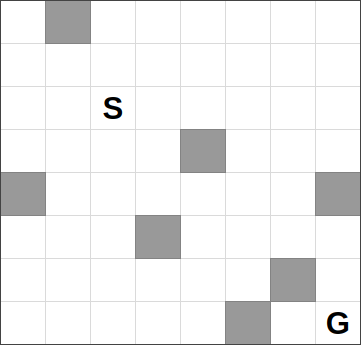

The environment, which can be modeled by an MDP, is a grid world with a fixed start state and a fixed goal state (see figure). Some states in this world are obstacles, and thus unreachable. At the beginning of each episode, the agent is placed at the start state. The agent must move to the goal by taking the actions: up, down, left, and right. An episode is terminated if the agent reaches the goal, or if the episode reaches a certain length.

- If the agent tries to move into an obstacle, it stays put.

- The environment is slippery, i.e. the agent will move in a random direction with some small probability, irrespective of the action taken.

- Reaching the goal gives a reward of $(+100)$.

- Reaching any other state gives a reward of $(-1)$.

Note:

Note:

- Your algorithms must work for an arbitrary-sized grid. In your experiments, however, you will fix the grid to be of size $32 \times 32$.

- The states are numbered $0$ to $N-1$, where $N$ is the total number of states. However, the numbers assigned to the states are permuted, which means cells $i$ and $i + 1$ need not be neighbours on the grid. The permutation function can be turned off for debugging, but will be used while testing your agent.

- A random grid world can be generated by using the

--instance parameter for the server. You are required to run your experiments on the gridworld instances 0 and 1.

Code

You will find two directories in this code base.

server

The server directory comprises the code to simulate

the MDP, in other words the "environment". The server waits for a

client ("agent") to connect with it and start taking actions in

sequence. Initially, by requesting "info" from the server, the client

can get to know the number of states in the task, as well as the

current state of the agent. Thereafter, for each action, the server

generates a reward and a next state based on the current state and the

chosen action, which is communicated back to the agent. Agent-server

communication happens through a TCP connection. The server is coded in

Python3. It can be started by calling python3 server.py

with the appropriate parameters.

After a complete run, the server prints (to stdout) the per episode reward obtained by the client agent, along with the number of episodes completed.

|

Parameters to server.py |

| --ip |

| --port |

| --side | Side length of the square grid |

| --instance | Instance number of the gridworld. The instance number fixes the start state, goal, and obstacles |

| --maxlength | Maximum number of time steps in an episode. Set it to 1000 for your experiments |

| --randomseed | Seed for RNG. This determines the permutation for state labels, and the actual environment dynamics |

| --numepisodes | Number of episodes to run |

| --slip | How likely is it for the agent to slip. Fixed at 0.02 for evaluation. Can be set to 0 for debugging |

| --noobfuscate | Turns off obfuscation (for debugging only) |

| --quiet | Suppresses detailed output. (Will make the code run a little faster) |

|

client

The client directory is provided to you as an example of what your submission must achieve. The client is the agent that implements learning algorithms. client.py handles the networking. The agent provided to you in agent.py merely samples the actions in a round-robin fashion: you will have to implement more efficient learning algorithms.

|

Parameters to client.py |

| --ip |

| --port |

| --algorithm | The learning algorithm to be used. {random, sarsa, qlearning} |

| --gamma | Discount Factor |

| --lambda | This provides the value for $\lambda$ for the Sarsa algorithm |

| --randomseed | Seed for RNG. Your agent must use this seed |

|

Assignment

- You must implement the Sarsa($\lambda$) and Q-learning algorithms.

Note: You must tune/anneal the learning and exploration rates to achieve the best performance.

- For Sarsa, you must implement either the replacing-trace method, or the accumulating-trace method, whichever is better according to your experiments.

- You must produce a report (as

report.pdf), containing the following elements.

- A graph each for MDP instances 0 and 1 of the expected cumulative reward (y axis) against episode number (x axis) for Q-learning, as well as Sarsa($\lambda$) for an optimised value of $\lambda$. Extend the x axis up to a point the graphs have stabilised.

- A graph each for MDP instances 0 and 1 of the expected cumulative reward over the first 500 episodes of training (y axis) for Sarsa($\lambda$), as $\lambda$ is varied (x axis).

- Your interpretation of the results (why are they as observed? what is interesting/unexpected in the results?).

Note: Plot both Q-learning and Sarsa results on the same graph (so you have four plots in total). Test and plot for at least 10 different values of $\lambda$ (need not be equally spaced). Your instances must be for a grid of size $32 \times 32$ (i.e. --side 32). Since the experiments are stochastic, average the values over 50 runs before plotting them. Mention the value of $\lambda$ that you found by tuning (for both instances) in your report.

References:

Submission

You will submit two items: (1) working code for your agent, and (2) the report as described.

Create a directory titled [rollnumber]. Place all your source and executable files, along with the report.pdf in this directory. The directory must contain a script named startclient.sh, which must take in all the command line arguments that are accepted by the example client provided. You are free to build upon the provided Python agent, or otherwise implement an agent in any programming language of your choice. The hostname and port number should suffice for setting up a TCP connection with the server.

Your code will be tested by running an experiment that calls startclient.sh in your directory. Before you submit, make sure you can successfully run startexperiment.sh on the departmental machines (sl2-*.cse.iitb.ac.in).

Provide references to any libraries and code snippets you have utilised (mention them in report.pdf). It is okay to use public code for parts of your agent such as the network communication module, or, say, libraries for random number generation and sorting. However, the logic used for sampling the actions must entirely be code that you have written.

Compress and submit your directory as [rollnumber].tar.gz. The directory must contain all the sources, executables, and experimental data, and importantly, your startclient.sh script and report.pdf file. Make sure you upload your submission to Moodle by the submission deadline.

Evaluation

Your algorithms will be tested on different MDP instances to verify that they perform as expected. 6 marks are allotted for the correctness of your algorithms (4 marks for Sarsa($\lambda$) and 2 marks for Q-learning), and 4 marks for your report. You will not get the full 4 marks for the report if your algorithms have not been implemented correctly.

The TAs and instructor may look at your source code and notes to corroborate the results obtained by your agent, and may also call you to a face-to-face session to explain your code.

Deadline and Rules

Your submission is due by 11.55 p.m., Tuesday, October 10. You are advised to finish working on your submission well in advance, keeping enough time to test it on the sl2 machines and upload to Moodle. Your submission will not be evaluated (and will be given a score of zero) if it is not received by the deadline.

You must work alone on this assignment. Do not share any code (whether yours or code you have found on the Internet) with your classmates. Do not discuss the design of your agent with anybody else.

You will not be allowed to alter your code in any way after the submission deadline. Before submission, make sure that it runs for a variety of experimental conditions on the sl2 machines. If your code requires any special libraries to run, it is your responsibility to get those libraries working on the sl2 machines (go through the CSE bug tracking system to make a request to the system administrators).