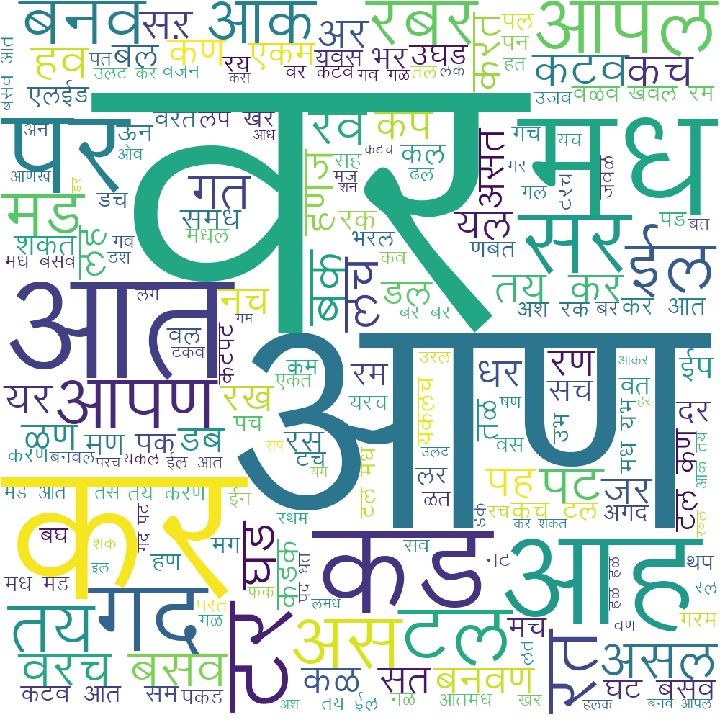

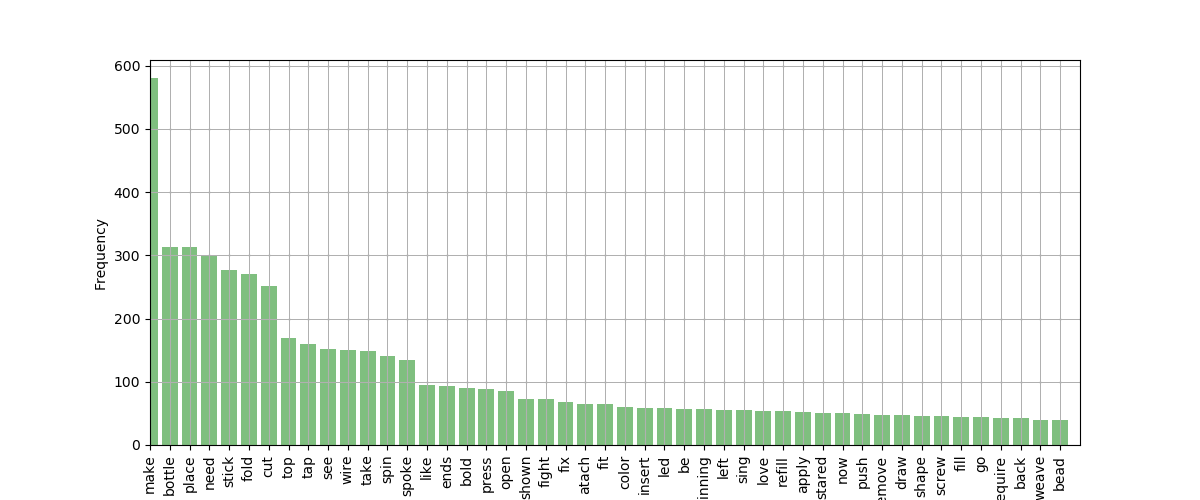

Data

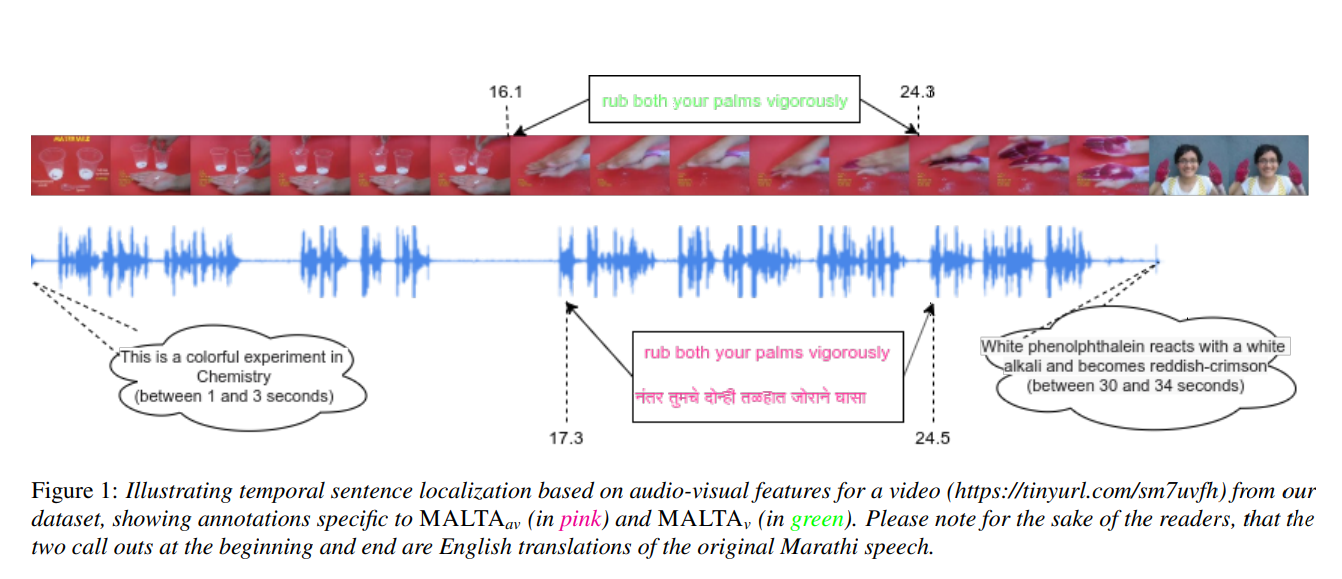

Video understanding via captioning has recently gained considerable traction. Although captions are often provided alongside videos, information about where each caption aligns temporally within the video is usually missing, even though such alignment would be particularly useful for indexing and retrieval.

Characteristics

Clarification

In MALTA_V, English transcripts are aligned by attending to the video alone, whereas in MALTA_AV, Marathi transcripts are aligned by attending to both the video and the audio.
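The description above does not specify an alignment model. Purely as an illustration of the difference between the two settings, here is a minimal sketch that scores one transcript sentence against per-time-step features with scaled dot-product attention and picks the most-attended step. It assumes sentence, video, and audio features are already embedded in a shared space of the same dimension. The function names and the choice to fuse audio with video by element-wise summation are hypothetical and are not the dataset's method.

```python
import math
from typing import List, Optional, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(query: Vector, keys: List[Vector]) -> List[float]:
    """Scaled dot-product attention weights of one query over a sequence of keys."""
    d = len(query)
    return softmax([dot(query, k) / math.sqrt(d) for k in keys])


def align_sentence(
    sentence_emb: Vector,
    video_feats: List[Vector],
    audio_feats: Optional[List[Vector]] = None,
) -> Tuple[int, List[float]]:
    """Return the index of the most-attended time step and all attention weights.

    Video-only (MALTA_V-style) when audio_feats is None. Otherwise (MALTA_AV-style),
    each time step's video and audio vectors are summed before attending; this
    summation is a hypothetical fusion choice made for illustration.
    """
    if audio_feats is None:
        keys = video_feats
    else:
        if len(audio_feats) != len(video_feats):
            raise ValueError("video and audio must have the same number of time steps")
        keys = [[v + a for v, a in zip(vf, af)] for vf, af in zip(video_feats, audio_feats)]
    weights = attend(sentence_emb, keys)
    best = max(range(len(weights)), key=weights.__getitem__)
    return best, weights
```

In the video-only call, the sentence is matched purely against visual features. Passing `audio_feats` lets an acoustic cue change which time step wins, which is the intuition behind attending to both modalities.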