In this section, we demonstrate the efficiency and applicability of our model through retrieval applications: detecting logical turning points, suggesting videos, searching for remix candidates, and classifying videos. Using these applications, we illustrate how the videoon model can be used to solve such problems easily and to build faster applications.

Applications built on this model are fast because they use pre-computed information; typically they take only a few minutes to compute the information they need. This illustrates the efficiency and applicability of our model.

Logical Turning Point Detection

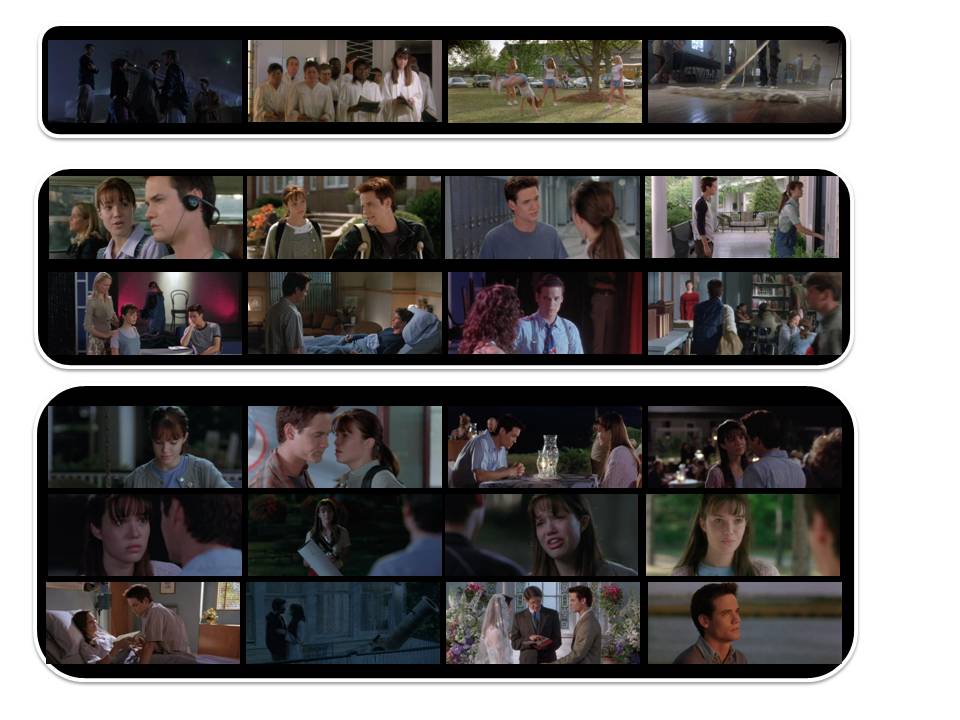

A logical turning point in a video is a point where the characteristics of the objects change drastically. Detecting such points helps many retrieval applications that work within a logical unit, and it also helps in video segmentation. An example of a logical turning point is shown in the figure above: the characteristics of the people appearing in each story differ from those in the other stories.

We address this problem at the shot level. We measure the semantic overlap of objects and frames, i.e., pixons and framons, between shots. When the overlap between shots stays low over a specific interval, we mark that point as a logical turning point.
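A minimal sketch of this idea in Python, assuming each shot has already been reduced to a set of integer pixon/framon IDs. The Jaccard index used as the overlap measure, the sliding `window` of shots, the `threshold`, and the function names are our illustrative assumptions, not parameters given in the text.

```python
from typing import List, Set


def jaccard(a: Set[int], b: Set[int]) -> float:
    """Overlap of two sets of dictionary units (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def turning_points(shots: List[Set[int]], window: int = 2,
                   threshold: float = 0.2) -> List[int]:
    """Return shot indices i where the `window` shots before i share little
    content with the `window` shots starting at i.

    Assumption (hypothetical representation): each shot is a set of
    pixon/framon IDs.
    """
    points = []
    for i in range(window, len(shots) - window + 1):
        # Pool the dictionary units on each side of the candidate boundary.
        before = set().union(*shots[i - window:i])
        after = set().union(*shots[i:i + window])
        if jaccard(before, after) < threshold:
            points.append(i)
    return points


# Two "stories": shots 0-2 share IDs 1-3, shots 3-5 share IDs 7-9.
shots = [{1, 2}, {1, 2, 3}, {2, 3}, {7, 8}, {8, 9}, {7, 9}]
print(turning_points(shots))  # [3]
```

Because neighbouring windows can both fall below the threshold near a single boundary, a practical version would probably merge adjacent detections, for example by keeping the index with the lowest overlap.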

Advantages

Some applications are valid only within one logical unit and do not work if applied across stories, so detecting story boundaries is critical for them. Examples of such applications are:

- Identifying key players in sports. Applied to the whole video, such a method tends to detect only all-rounders; it works well when applied to individual stories and the results are then combined.

- Home video enhancement. An application that learns the environment setting (indoor, outdoor, etc.) and enhances the video accordingly works best when applied to individual stories. Given a video that mixes indoor and outdoor sequences, it would get confused, while applying it at the shot or scene level would leave adjacent shots or scenes inconsistent.

- Learning the semantics of a video is more meaningful and easier when done for each story rather than across stories.

- Similarity computation gives a good picture when done at the story level; the measure becomes somewhat noisier at lower levels. When done across stories, the similarity measure is restricted to matching the sequence of stories, whereas matching at the story level is sufficient for good similarity in retrieval.

Other applications need the logical boundaries for processing:

- A good video preview that captures the storyline can be produced only when the logical boundaries are known.

- Clustering videos in a video data store at the story level facilitates better retrieval.

- This method lets us break a video down into top-level semantic units.

As logical turning points capture the important events in a video, the shots around them can be used to prepare a storyline summary. In addition, a few shots of the most frequent shotons in each story are included to provide smooth transitions between stories. This ensures that the essential information is included in the summary.
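The summary construction can be sketched as follows, assuming each shot is labelled with a single integer shoton ID and that turning points are given as the indices of the shots where a new story begins. The `context` and `per_story` parameters, and the choice of the first shot that carries a frequent shoton, are hypothetical illustrations rather than details from the text.

```python
from collections import Counter
from typing import List


def build_summary(shoton_of_shot: List[int], turning_points: List[int],
                  context: int = 1, per_story: int = 1) -> List[int]:
    """Pick shot indices for a storyline summary.

    Assumptions: shoton_of_shot[i] is the shoton ID of shot i, and each
    turning point is the index of the first shot of a new story.
    """
    n = len(shoton_of_shot)
    selected = set()
    # 1. Shots around each turning point: `context` shots on either side.
    for t in turning_points:
        selected.update(range(max(0, t - context), min(n, t + context)))
    # 2. Stories are the spans between consecutive turning points.
    bounds = [0] + sorted(turning_points) + [n]
    for start, end in zip(bounds, bounds[1:]):
        if start >= end:
            continue
        counts = Counter(shoton_of_shot[start:end])
        for label, _ in counts.most_common(per_story):
            # Keep the first shot in the story that carries this frequent shoton.
            first = next(i for i in range(start, end) if shoton_of_shot[i] == label)
            selected.add(first)
    return sorted(selected)


print(build_summary([0, 0, 1, 0, 5, 6, 6, 6], turning_points=[4]))  # [0, 3, 4, 5]
```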

Suggest a Video

When choosing a video from a video store, users typically collect information from various sources to judge whether they would like it. Alternatively, if videos of interest are presented to users based on their viewing history, users have a better experience and the video store benefits as well.

In this work, we propose a method that automatically suggests videos based on the user's interests, using story similarity between videos. This is illustrated in the Figure below.

There are many ways to compare videos, such as comparing the feature vectors of the dictionaries, counting overlapping dictionary units, or computing correlation. Dictionary unit similarity is a very useful measure, as it captures the sequence of events. In our experiments, we use the cross-correlation of the framon representations to compute the similarity between videos, and the video with the highest correlation value is suggested.
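One way to realise this, assuming each video is represented as a sequence of framon IDs (for instance one per frame or per shot). Since framon IDs are categorical, this sketch swaps in a match-counting form of cross-correlation: at each lag it counts the aligned positions with equal IDs and normalises the best count by the length of the shorter sequence. The names `framon_xcorr` and `suggest` are hypothetical.

```python
from typing import Dict, Sequence


def framon_xcorr(a: Sequence[int], b: Sequence[int]) -> float:
    """Peak match-count cross-correlation of two framon-ID sequences.

    For every lag, count the aligned positions that carry the same framon ID,
    then normalise the best count by the length of the shorter sequence.
    """
    if not a or not b:
        return 0.0
    best = 0
    for lag in range(-(len(b) - 1), len(a)):
        # b[j] is aligned with a[j + lag].
        matches = sum(1 for j in range(len(b))
                      if 0 <= j + lag < len(a) and a[j + lag] == b[j])
        best = max(best, matches)
    return best / min(len(a), len(b))


def suggest(watched: Sequence[int], catalog: Dict[str, Sequence[int]]) -> str:
    """Return the catalog video whose framon sequence correlates best with `watched`."""
    return max(catalog, key=lambda name: framon_xcorr(watched, catalog[name]))


catalog = {"x": [9, 1, 2, 3], "y": [5, 6, 7, 8]}
print(suggest([1, 2, 3, 4], catalog))  # x
```

Searching over all lags lets two videos match even when the same sequence of events starts at different points, which is the property the sequence-aware measure is meant to capture.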

Remix Candidate Search

Remixing is the process of generating a new video from existing videos by changing either the audio or the video. Remixes entertain audiences because they offer a different version of a popular video. Moreover, remixes are a cost-effective way of producing entertainment video, as they reuse existing resources.

At present, remixes are generated by manually selecting similar candidate videos and combining them. However, this process is time-consuming and limited by the knowledge of the person selecting the candidates, and the resulting remixes are not always pleasant.

In this paper, we present a novel approach for finding remix candidates automatically using object motion synchronization. End users tagged the videos generated by our approach as pleasant.

In our method, we choose videos whose framons have a high cross-correlation and exchange their audio. Because both the motion and the objects of the two videos are highly correlated, this results in a good remix.

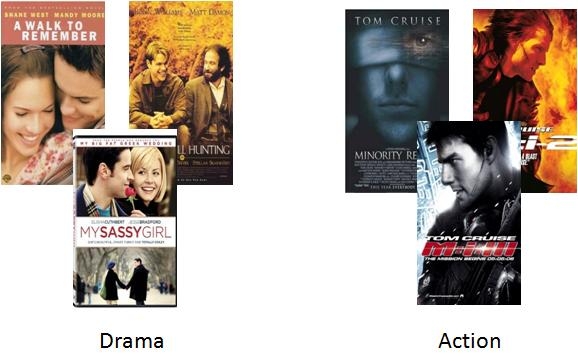
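A sketch of the candidate search, assuming each video has been summarised as a numeric per-frame signal derived from its framons (for instance a hypothetical framon activity or motion score per frame). It takes the peak Pearson correlation over a small range of lags as the cross-correlation and returns the pairs above a threshold as candidates for an audio swap. `max_lag`, `min_overlap`, `threshold`, and all function names are illustrative assumptions.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((p - mx) * (q - my) for p, q in zip(x, y))
    vx = sum((p - mx) ** 2 for p in x)
    vy = sum((q - my) ** 2 for q in y)
    return cov / math.sqrt(vx * vy) if vx > 0 and vy > 0 else 0.0


def peak_xcorr(a: Sequence[float], b: Sequence[float],
               max_lag: int = 2, min_overlap: int = 3) -> float:
    """Highest Pearson correlation between a[i] and b[i + lag] over |lag| <= max_lag."""
    best = 0.0  # negative correlations are treated as "no match"
    for lag in range(-max_lag, max_lag + 1):
        idx = [i for i in range(len(a)) if 0 <= i + lag < len(b)]
        if len(idx) >= min_overlap:
            best = max(best, pearson([a[i] for i in idx], [b[i + lag] for i in idx]))
    return best


def remix_candidates(signals: Dict[str, Sequence[float]], threshold: float = 0.9,
                     max_lag: int = 2) -> List[Tuple[str, str, float]]:
    """Pairs of videos whose signals are synchronised enough to swap audio."""
    pairs = []
    for u, v in combinations(sorted(signals), 2):
        score = peak_xcorr(signals[u], signals[v], max_lag)
        if score >= threshold:
            pairs.append((u, v, score))
    # Most strongly synchronised pairs first.
    return sorted(pairs, key=lambda p: p[2], reverse=True)


signals = {
    "a": [0, 1, 0, 1, 0, 1],
    "b": [1, 0, 1, 0, 1, 0],  # same rhythm as "a", shifted by one frame
    "c": [3, 3, 3, 3, 3, 3],  # static, never a candidate
}
for u, v, s in remix_candidates(signals):
    print(u, v, round(s, 3))  # a b 1.0
```

Allowing a small lag tolerates videos whose motion is synchronised but offset by a few frames; the audio of one video could then be aligned to the other using the lag that produced the peak.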

Video Classification

Video classification identifies the category of a video. In our approach, we use the percentage of framon overlap to determine the category. To classify videos, we take a few movies from each category as input and assign to that category the movies with high overall matching scores. When a movie matches more than one category, the already classified movies from those categories vote on it, and the final category is decided by the vote.
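The procedure can be sketched as follows, assuming each movie is a non-empty set of framon IDs. How categories are scored (best-matching labelled movie), when two categories count as "in conflict" (`margin`), and which labelled movies may vote (`vote_threshold`) are our assumptions; the text does not fix these details, and the function names are hypothetical.

```python
from typing import Dict, List, Set


def overlap_pct(query: Set[int], other: Set[int]) -> float:
    """Percentage of the query's framons that also occur in `other`."""
    return 100.0 * len(query & other) / len(query) if query else 0.0


def classify(query: Set[int], labelled: Dict[str, List[Set[int]]],
             margin: float = 5.0, vote_threshold: float = 50.0) -> str:
    """Assign `query` to a category of already labelled movies.

    Assumptions: every movie is a non-empty set of framon IDs and every
    category has at least one labelled movie.
    """
    # Score each category by its best-matching labelled movie.
    scores = {cat: max(overlap_pct(query, m) for m in movies)
              for cat, movies in labelled.items()}
    best = max(scores.values())
    # Categories within `margin` points of the best score are in conflict.
    tied = [cat for cat, s in scores.items() if best - s <= margin]
    if len(tied) == 1:
        return tied[0]
    # Conflict: each labelled movie in a tied category that overlaps the
    # query by at least `vote_threshold` percent votes for its own category.
    votes = {cat: sum(overlap_pct(query, m) >= vote_threshold for m in labelled[cat])
             for cat in tied}
    return max(tied, key=lambda cat: votes[cat])


labelled = {"a": [{1, 2, 3, 7}, {30}], "b": [{1, 2, 3, 8}, {1, 2, 4, 9}]}
print(classify({1, 2, 3, 4}, labelled))  # b
```

In the example both categories score 75%, so the vote decides: one labelled movie in "a" and two in "b" overlap the query by at least 50%.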