Abstract

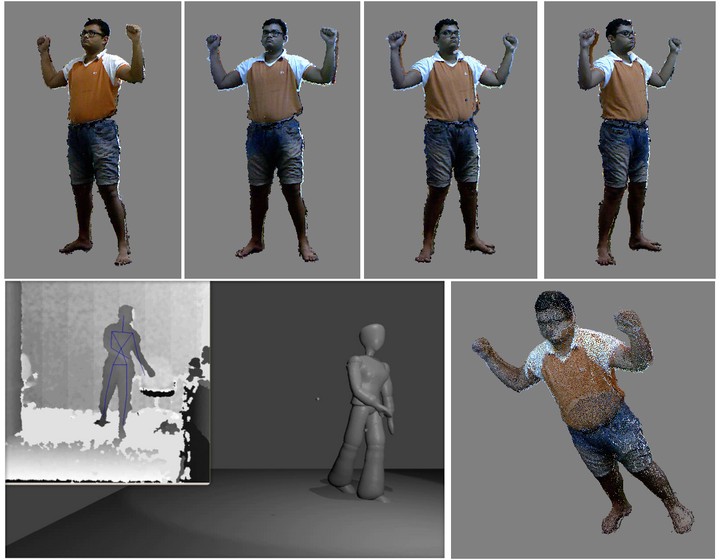

We present a method for creating virtual character models of real users from noisy depth data. Four depth sensors capture a point cloud model of the person. Meshing this data directly often produces topology that is unsuitable for character animation, so we instead fit a single template mesh to the point cloud in two stages: the first applies a piecewise smooth deformation to the mesh, and the second refines the fit using an iterative Laplacian framework. We complete the model by adding properly aligned and blended textures to the final mesh, and we show that it can be easily animated using motion data from a single depth camera. Our process preserves the topology of the original template mesh, and the proportions of the final mesh match those of the actual user, which validates the accuracy of the process. Apart from the depth sensors, the process requires no specialized hardware to create the mesh. It is efficient, robust, and mostly automatic.
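To make the second-stage idea concrete, the following is a minimal sketch of an iterative Laplacian fit, not the implementation used in this work. It rests on several assumptions that the abstract does not state: uniform Laplacian weights, nearest-point correspondences recomputed in each outer iteration, a single scalar data weight, and a simple Jacobi-style relaxation of the linear system (L + wI)x = Lx0 + wt in place of a sparse solver. All function and parameter names (`laplacian_coords`, `nearest`, `fit_laplacian`, `weight`, `outer`, `inner`) are hypothetical.

```python
import math


def laplacian_coords(verts, nbrs):
    """Uniform Laplacian coordinates: each vertex minus the mean of its neighbours."""
    dim = len(verts[0])
    out = []
    for i, v in enumerate(verts):
        n = len(nbrs[i])
        mean = [sum(verts[j][k] for j in nbrs[i]) / n for k in range(dim)]
        out.append([v[k] - mean[k] for k in range(dim)])
    return out


def nearest(p, cloud):
    """Brute-force nearest point in the cloud (assumed correspondence rule)."""
    return min(cloud, key=lambda q: sum((a - b) ** 2 for a, b in zip(p, q)))


def fit_laplacian(verts, nbrs, cloud, weight=1.0, outer=10, inner=50):
    """Pull template vertices toward the cloud while keeping their Laplacian detail.

    Connectivity (nbrs) is never modified, so the template topology is preserved.
    """
    delta = laplacian_coords(verts, nbrs)  # local shape of the template
    x = [list(v) for v in verts]
    dim = len(verts[0])
    for _ in range(outer):
        # Re-estimate correspondences from the current vertex positions.
        targets = [nearest(p, cloud) for p in x]
        for _ in range(inner):
            # Jacobi update: balance neighbour mean + detail against the target.
            new_x = []
            for i in range(len(x)):
                n = len(nbrs[i])
                row = []
                for k in range(dim):
                    mean_k = sum(x[j][k] for j in nbrs[i]) / n
                    row.append(
                        (mean_k + delta[i][k] + weight * targets[i][k])
                        / (1.0 + weight)
                    )
                new_x.append(row)
            x = new_x
    return x


# Toy usage: a closed 8-vertex ring of radius 1 fitted to a circle of radius 2.
template = [[math.cos(2 * math.pi * i / 8), math.sin(2 * math.pi * i / 8)]
            for i in range(8)]
neighbours = [[(i - 1) % 8, (i + 1) % 8] for i in range(8)]
cloud = [[2 * math.cos(2 * math.pi * j / 64), 2 * math.sin(2 * math.pi * j / 64)]
         for j in range(64)]
fitted = fit_laplacian(template, neighbours, cloud)
```

In this sketch a larger `weight` pulls the vertices closer to the point cloud, while a smaller one favours the template's local shape. A practical system would more likely use cotangent weights and a sparse least-squares solve on a triangle mesh.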