CS 747: Foundations of Intelligent and Learning Agents

(Autumn 2016)

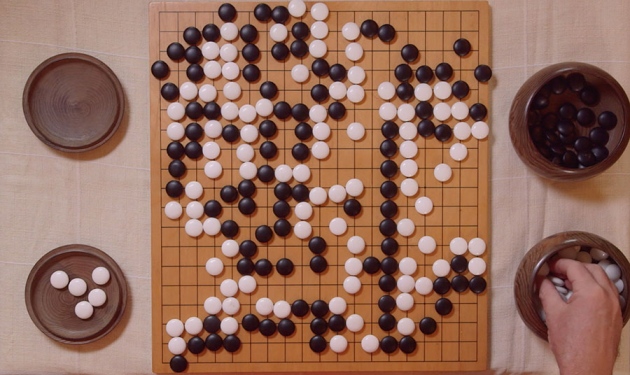

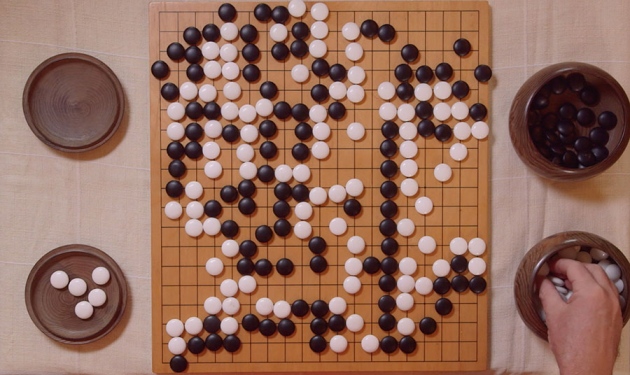

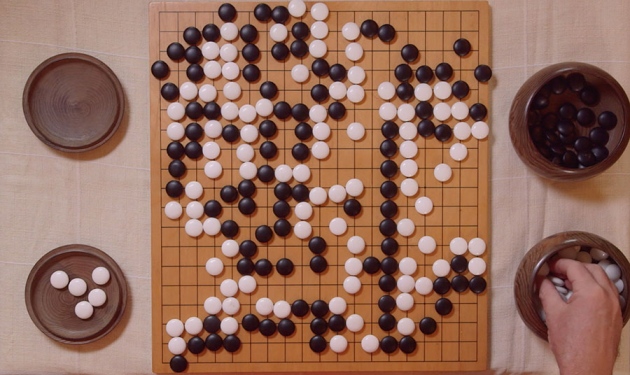

(Picture source: http://www.nature.com/polopoly_fs/7.33483.1453824868!/image/WEB_Go---1.jpg_gen/derivatives/landscape_630/WEB_Go---1.jpg.)

Instructor

Shivaram Kalyanakrishnan

Office: Room 220, New CSE Building

Phone: 7704

E-mail: shivaram@cse.iitb.ac.in

Teaching Assistants

Samiran Roy

Office: 402, New CSE Building, Desk 39

E-mail: samiranroy@cse.iitb.ac.in

A. Siddharth

Office: 402, New CSE Building, Desk 40

E-mail: siddarth@cse.iitb.ac.in

Ashish Ramteke

Office: SynerG Lab, KReSIT Building, Desk B-2

E-mail: ashishr@cse.iitb.ac.in

Class

Lectures will be held in F. C. Kohli Auditorium, KReSIT Building,

during Slot 6: 11.05 a.m. – 12.30 p.m. Wednesdays and Fridays.

Office hours will immediately follow class and be up to 1.15

p.m. on Wednesdays and Fridays. Meetings can also be arranged by

appointment.

Course Description

Today's computing systems are becoming increasingly adaptive and

autonomous: they are akin to intelligent, decision-making

``agents''. With its roots in artificial intelligence and machine

learning, this course covers the foundational principles of designing

such agents. Topics covered include: (1) agency, intelligence, and

learning; (2) exploration and multi-armed bandits; (3) Markov Decision

Problems and planning; (4) reinforcement learning; (5) search; (6)

multi-agent systems and multi-agent learning; and (7) case

studies.

The course will adopt a ``hands-on'' approach, with programming

assignments designed to highlight the relationship between theory and

practice. Case studies, as well as invited talks from experts, will

offer an ``end-to-end'' view of deployed agents. It is hoped that

students can apply the learnings from this course to the benefit of

their respective pursuits in various areas of computer science and

related fields.

Prerequisites

The course is open to all Ph.D. students, all masters students, and

undergraduate/dual-degree students in their fourth (or higher) year of

study.

The course does not formally have other courses as

prerequisites. However, class lectures and assignments will assume

that the student is comfortable with probability and algorithms. The

course has an intensive programming component: based on ideas

discussed in class, the student must be able to independently design,

implement, and evaluate programs in a language of his/her choice. The

student must be prepared to spend a significant amount of time on the

programming component of the course.

Evaluation

Grades will be decided based on four programming assignments, each

worth 10 marks; a programming project worth 20 marks; a mid-semester

examination worth 15 marks; and an end-semester examination worth 25

marks.

The programming assignments and project must be turned in through Moodle.

Students auditing the course must score 50 or more marks in the

course to be awarded an ``AU'' grade.

Academic Honesty

Students are expected to adhere to the highest standards of

integrity and academic honesty. Acts such as copying in the

examinations and sharing code for the programming assignments will be

dealt with strictly, in accordance with the

institute's procedures

and disciplinary

actions for academic malpractice.

Texts and References

Reinforcement Learning: An Introduction, Richard S. Sutton and

Andrew G. Barto, MIT Press,

1998. On-line

version.

Artificial Intelligence: A Modern Approach, Stuart J. Russell and

Peter Norvig, 3rd edition, Prentice-Hall, 2009.

Algorithms for Reinforcement Learning, Csaba Szepesvári,

Morgan & Claypool,

2009. On-line

version.

Dynamic Programming and Optimal Control, Volume II, Dimitri

P. Bertsekas, 4th edition, Athena Scientific, 2012.

Regret Analysis of Stochastic and Nonstochastic Multi-armed Bandit

Problems, Sébastien Bubeck and Nicolò Cesa-Bianchi, Foundations and

Trends in Machine Learning, Volume 5, Number 1,

2012. On-line

version.

Selected research papers.

-

A

Stochastic Approximation Method

Herbert Robbins and Sutton Monro, 1951

-

Probability

inequalities for sums of bounded random variables

Wassily Hoeffding, 1963

-

Asymptotically

Efficient Adaptive Allocation Rules

T. L. Lai and Herbert Robbins, 1985

-

Markov games as a framework for multi-agent reinforcment learning

Michael L. Littman, 1994

-

Foraging in an Uncertain Environment Using Predictive Hebbian Learning

P. Read Montague, Peter Dayan, and Terrence J. Sejnowski, 1994

-

On the Complexity of Solving Markov Decision Problems

Michael L. Littman, Thomas L. Dean, and Leslie Pack Kaelbling, 1995

-

Reinforcement Learning with Replacing Eligibility Traces

Satinder P. Singh and Richard S. Sutton, 1996.

-

Dopamine neurons report and error in the temporal prediction of reward during learning

Jeffrey R. Hollerman and Wolfram Schultz, 1998

-

Planning and acting in partially observable stochastic domains

Leslie Pack Kaelbling, Michael L. Littman, and Anthony R. Cassandra, 1998

-

Learning to Drive a Bicycle using Reinforcement Learning and Shaping

Jette Randløv and Preben Alstrøm, 1998

-

Policy invariance under reward transformations: Theory and application to reward shaping

Andrew Y. Ng, Daishi Harada, and Stuart Russell, 1999

-

Convergence

Results for Single-Step On-Policy Reinforcement-Learning Algorithms

Satinder Singh, Tommi Jaakkola, Michael L. Littman, and Csaba Szepesvári, 2000

-

Policy

Gradient Methods for Reinforcment Learning with Function

Approximation

Richard S. Sutton, David McAllester, Satinder Singh, and Yishay

Mansour, 2000.

-

Rational and Convergent Learning in Stochastic Games

Michael Bowling and Manuela Veloso, 2001

-

Finite-time

Analysis of the Multiarmed Bandit Problem

Peter Auer, Nicolò Cesa-Bianchi, and Paul Fischer, 2002

-

The Random-Facet Simplex Algorithm on Combinatorial Cubes

Bernd Gärtner, 2002

-

Nash Q-Learning For General-Sum Stochastic Games

Junling Hu and Michael P. Wellman, 2003.

-

Evolving Neural Networks through

Augmenting Topologies

Kenneth O. Stanley and Risto Miikkulainen, 2002

-

Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence

Pamela McCorduck, 2004

-

Action

Elimination and Stopping Conditions for the Multi-Armed Bandit and

Reinforcement Learning Problems

Eyal Even-Dar, Shie Mannor,

and Yishay Mansour, 2006

-

Bandit based Monte-Carlo Planning

Levente Kocsis and Csaba Szepesvári, 2006

-

If multi-agent learning is the answer, what is the question?

Yoav Shoham, Rob Powers, and Trond Grenager, 2006

-

Batch Reinforcement Learning in a Complex Domain

Shivaram Kalyanakrishnan and Peter Stone, 2007

-

Half Field Offense in RoboCup Soccer: A Multiagent Reinforcement Learning Case Study

Shivaram Kalyanakrishnan, Yaxin Liu, and Peter Stone, 2007

-

REGAL: A Regularization based Algorithm for Reinforcement Learning in

Weakly Communicating MDPs

Peter L. Bartlett and Ambuj Tewari, 2009.

-

Is it

Enough to Get the Behaviour Right?

Hector J. Levesque,

2009

-

Artificial Intelligence: A Modern Approach

Stuart Russell and Peter Norvig, 2009

-

UT Austin Villa 2011: A

Champion Agent in the RoboCup 3D Soccer Simulation Competition

Patrick MacAlpine, Daniel Urieli, Samuel Barrett, Shivaram

Kalyanakrishnan, Francisco Barrera, Adrian Lopez-Mobilia, Nicolae Ştiurcă, Victor Vu, and Peter Stone, 2012

-

The Quest for Artificial Intelligence: A History of Ideas and Achievements

Nils J. Nilsson, 2010

-

An Empirical Evaluation of Thompson Sampling

Olivier Chapelle and Lihong Li, 2011

-

The KL-UCB Algorithm for Bounded Stochastic Bandits and Beyond

Aurélien Garivier and Olivier Cappé, 2011

-

Learning Methods for Sequential Decision Making with Imperfect Representations

Shivaram Kalyanakrishnan, 2011

-

Thompson Sampling: An Asymptotically Optimal Finite-Time Analysis

Emilie Kaufmann, Nathaniel Korda, and Rémi Munos, 2012

-

Batch-Switching

Policy Iteration

Shivaram Kalyanakrishnan, Utkarsh Mall, and Ritish Goyal, 2016a

-

Randomised Procedures for Initialising and Switching Actions in Policy Iteration

Shivaram Kalyanakrishnan, Neeldhara Misra, and Aditya Gopalan, 2016b

-

Mastering the game of Go with deep neural networks and tree search

David Silver, Aja Huang, Chris J. Maddison, Arthur Guez, Laurent Sifre, George van den Driessche, Julian Schrittwieser, Ioannis Antonoglou, Veda Panneershelvam, Marc Lanctot, Sander Dieleman, Dominik Grewe, John Nham, Nal Kalchbrenner, Ilya Sutskever, Timothy Lillicrap, Madeleine Leach, Koray Kavukcuoglu, Thore Graepel, and Demis Hassabis, 2016

Communication

This page will serve as the primary source of information regarding

the course, the schedule, and related announcements. The Moodle page

for the course will be used for sharing resources for the lectures and

assignments, and also for recording grades.

E-mail is the best means of communicating with the instructor;

students must send e-mail with ``[CS747]'' in the header, with a copy

marked to the TAs.

Schedule

-

July 20: Welcome, Introduction to the course.

-

July 22: Multi-armed Bandits.

Reading: Sections 2–2.2, Sutton and Barto (1998); Chapter 1, Bubeck and Cesa-Bianchi (2012).

Summary: Coin-tossing game, definition of stochastic multi-armed bandit, definition of

algorithm, ε-greedy and ε-first algorithms, definition of regret.

-

July 27: Multi-armed Bandits.

Reading: Auer et al. (2002).

Summary: Graph of E[r^{t}] versus t, GLIE (Greedy in the Limit with

Infinite Exploration) property, Lai and Robbins's lower bound on

regret, UCB algorithm.

References: Section 2.2, Singh et al. (2000); Lai and Robbins (1985).

-

July 29: Multi-armed Bandits.

Reading: Wikipedia page

on Hoeffding's

Inequality.

Summary: Hoeffding's Inequality, first part of proof of regret bound for UCB (bounding the

probability of pulling a sub-optimal arm beyond a threshold number of pulls).

References: Theorem 2, Hoeffding (1963), Auer et al. (2002).

-

August 3: Multi-armed Bandits.

Reading: Sections 1–3, Garivier and Cappé (2011).

Summary: Second part of proof of regret bound for UCB (bounding the

expectation of the number of pulls of a sub-optimal arm), history of

theoretical results on stochastic bandits.

Reference: Auer et al. (2002).

-

August 5: Multi-armed Bandits.

Reading: Chapelle and Li (2011).

Summary: Revision of portion of UCB proof, Thompson Sampling,

discussion of bandit variations: adversarial setting, pure

exploration, modeling dependence between arms.

References: Kaufmann et al. (2012); Chapters 3–5, Bubeck and

Cesa-Bianchi (2012), Even-Dar et al. (2006).

-

August 10: MDP Planning.

Reading: Section 1, Kalyanakrishnan et al. (2016b).

Summary: Definition of Markov Decision Problem, policy, and value

function; representing MDPs through state transition diagrams;

existence of an optimal policy.

-

August 12: MDP Planning.

Reading: Chapter 3, Sutton and Barto (1998).

Summary: Unifying episodic and continuous tasks; ``design'' of states,

actions, and rewards; illustrative examples (chess, soccer, and

helicopter hovering); introduction to Bellman's Equations.

-

August 17: MDP Planning.

Reading: Chapter 4, Sutton and Barto (1998).

Summary: Solution of Bellman's Equations by variable elimination and

by iteration; Bellman operator and its fixed point; Bellman's

Optimality Equations; Bellman Optimality operator and its fixed

point.

-

August 19: MDP Planning.

Reading: Littman et al. (1995).

Summary: Value Iteration, Linear Programming formulation for MDP

planning, action value functions.

Reference: Gärtner (2002).

-

August 24: MDP Planning.

Reading: Section 2, Kalyanakrishnan et al. (2016b).

Summary: Policy Iteration, first part of proof of convergence and

correctness.

-

August 26: MDP Planning.

Reading: Chapter 4, Sutton and Barto (1998).

Summary: Second part of proof of convergence and correctness, upper

and lower bounds for Policy Iteration.

Reference: Section 2, Kalyanakrishnan et al. (2016a); Section 2,

Kalyanakrishnan et al. (2016b).

-

August 31: Agency, Intelligence, and Learning.

Summary: Notions of intelligence and artificial intelligence, summary

of developments up to 1956.

References: McCorduck (2004), Nilsson (2010).

-

September 2: Agency, Intelligence, and Learning.

Summary: Summary of developments in AI starting 1956.

References: Chapter 1, Russell and Norvig (2009); Levesque (2009).

-

September 10: Mid-semester examination. 8.30 a.m. – 10.30 a.m., 101/103/105 New CSE Building.

-

September 14: Reinforcement learning.

Reading: Introduction to Chapter 5, Sutton and Barto (1998).

Summary: The Reinforcement Learning Problem; Ergodic, Unichain, and Communicating MDPs; model-based learning algorithm for acting optimally in the limit.

Reference: Section 1, Bartlett and Tewari (2009).

-

September 16: Reinforcement learning.

Reading: Chapter 5, Sutton and Barto (1998).

Summary: Monte Carlo policy evaluation, first-visit and every-visit

Monte Carlo methods, PAC policy evaluation.

Reference: Sutton and Singh (1996), Class Note 1 (see Moodle).

-

September 21: Reinforcement learning.

Summary: On-line implementation of Monte Carlo policy evaluation,

bootstrapping, TD(0) algorithm.

Reference: Robbins and Monro (1951).

-

September 23: Reinforcement learning.

Reading: Sections 6.1–6.5, Sutton and Barto (1998).

Summary: Maximum likelihood estimates and least squares estimates,

convergence of batch TD(0) and batch TD(1), temporal difference

learning for control.

-

September 28: Reinforcement learning.

Reading: Chapter 7 (except Section 7.7), Sutton and Barto (1998).

Summary: TD learning in animals, TD(lambda) algorithm.

References: Wikipedia

page on Classical Conditioning; Montague, Dayan, and Sejnowski

(1994); Hollerman and Schultz (1998).

-

September 30: Reinforcement learning, Case Study.

Summary: Sarsa(0) and Q(0), RoboCup: A Grand Challenge for AI.

References: Kalyanakrishnan et al. (2007), MacAlpine et al. (2012).

-

October 5: Reinforcement learning.

Reading: Sections 8.1–8.3, Sutton and Barto (1998).

Summary: Need for generalisation and function approximation, Linear TD(lambda).

-

October 7: Reinforcement learning.

Reading: Sections 8.4–8.7, Sutton and Barto (1998).

Summary: Tsitsiklis and Van Roy's counter-example, divergence of

off-policy bootstrapping with linear function approximation.

-

October 14: Reinforcement learning.

Reading: Section 8.3.2, Sutton and Barto (1998).

Summary: Geometric view of linear function approximation, tile

coding.

-

October 19: Reinforcement learning.

Reading: Chapter 4, Kalyanakrishnan (2011).

Summary: Policy search, comparison with value function-based methods,

Tetris as an illustrative example.

-

October 21: Reinforcement learning.

Reading: Introduction and Section 1, Sutton et al. (2000); Chapter 9,

Sutton and Barto (1998); Kalyanakrishnan and Stone (2007).

Summary: Policy gradient methods, model-based reinforcement learning,

batch reinforcement learning.

-

October 26: Reinforcement learning.

Reading: Silver et al. (2016).

Summary: AlphaGo, Monte Carlo Tree Search.

Reference: Kocsis and Szepesvári (2006).

-

October 28: Multi-agent learning.

Reading: Littman (1994).

Summary: Matrix games, Minimax-optimality, Markov games, Minimax-Q algorithm.

References: Bowling and Veloso (2001), Hu and Wellman (2003), Shoham et al. (2006).

-

November 2: Miscellaneous topics.

Summary: POMDPs, neuro-evolution, reward shaping.

References: Kaelbling et al. (1998), Randløv and Alstrøm (1998), Stanley and Miikkulainen (2002), Ng et al. (1999).

Assignments

-

August 16: Programming assignment 1 announced on

Moodle. Submission deadline: 11.55 p.m., September 1, 2016.

-

September 16: Programming assignment 2 announced on

Moodle. Submission deadline: 11.55 p.m., September 25, 2016.

-

September 30: Programming assignment 3 announced on

Moodle. Submission deadline:

11.55 p.m., October 10, 2016 11.55 p.m., October 12, 2016.

-

October 15: Programming assignment 4 announced on

Moodle. Submission deadline: 11.55 p.m., October 25, 2016.

-

October 16: Final project announced on Moodle. Submission

deadline: 11.55 p.m., November 15, 2016.