Abstract

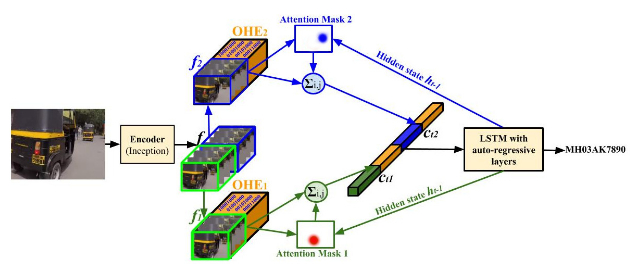

We address the problem of automatically recognizing license plates and street signs in challenging conditions such as chaotic traffic. We leverage state-of-the-art text spotters to generate a large amount of noisy labeled training data, and we train two different models for recognition. Our baseline is a conventional Convolutional Neural Network (CNN) encoder followed by a Recurrent Neural Network (RNN) decoder. As our first contribution, we bypass the detection phase by augmenting the baseline with an attention mechanism in the RNN decoder. Next, we enable end-to-end training on scenes containing license plates by incorporating an Inception-based CNN encoder that makes the model robust to multiple scales. We achieve an improvement of 3.75% at the sequence level over the baseline model. We present the first results of using multi-headed attention models for text recognition in images and illustrate the advantages of multiple heads over a single head, observing gains as large as 7.18% from incorporating multi-headed attention. We also experiment with multi-headed attention models on the French Street Name Signs (FSNS) dataset and on a new Indian Street dataset that we release for experiments. Across three datasets of varying complexity, models with multiple attention masks perform better than the model with single-headed attention. Our models outperform state-of-the-art methods on the FSNS and IIIT-ILST Devanagari datasets by 1.1% and 8.19%, respectively.
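The abstract reports gains "at the sequence level". As that term is conventionally used, a prediction counts as correct only when the entire recognized string matches its label. The sketch below illustrates that metric next to a simpler per-character score. It is not the authors' evaluation code: the function names, the position-by-position character comparison, and the plate strings are all hypothetical and chosen only for illustration.

```python
def sequence_accuracy(predictions, targets):
    """Fraction of samples whose predicted string equals the label exactly."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0
    exact = sum(p == t for p, t in zip(predictions, targets))
    return exact / len(targets)


def character_accuracy(predictions, targets):
    """Fraction of label characters matched position by position.

    Assumption: no alignment is performed, so an inserted or deleted
    character shifts every later position. An edit-distance based score
    would be more forgiving.
    """
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    total = sum(len(t) for t in targets)
    if total == 0:
        return 0.0
    correct = sum(
        sum(pc == tc for pc, tc in zip(p, t))
        for p, t in zip(predictions, targets)
    )
    return correct / total


# Made-up plate strings: the second prediction has one wrong character.
example_targets = ["MH12AB1234", "KA01CD5673", "DL8CAF5030"]
example_predictions = ["MH12AB1234", "KA01CD5678", "DL8CAF5030"]

seq_acc = sequence_accuracy(example_predictions, example_targets)
char_acc = character_accuracy(example_predictions, example_targets)
```

In this toy example a single wrong character costs one full sample at the sequence level (2 of 3 correct) while barely moving the character-level score (29 of 30 correct), which is why sequence-level accuracy is the stricter of the two.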

Other related papers are:

- StreetOCRCorrect: An Interactive Framework for OCR Corrections in Chaotic Indian Street Videos

Pankaj Singh, Bhavya Patwa, Rohit Saluja, Ganesh Ramakrishnan, Parag Chaudhuri. Workshop on Open Source Tools (OST) at the International Conference on Document Analysis and Recognition (ICDAR) 2019, Sydney, Australia.