- Annual Progress Seminar 5

Overview: Photo-realistic computer graphics attempts to match, as closely as possible, the rendering of a virtual scene with an actual photograph of the scene had it existed in the real world. Of the several techniques used to achieve this goal, physically-based approaches (i.e., those that attempt to simulate the actual physical process of illumination) provide the most striking results.

My thesis addresses the problem of producing a global illumination (GI) solution for point models, a photo-realistic, physically-based approach central to computer graphics. For example, the three-dimensional point models may be scans of cultural heritage structures that we wish to view in a virtual museum under various lighting conditions.

CHALLENGES: The point clouds of interest are not solitary models but may consist of hard-to-segment entities, which inhibits surface reconstruction algorithms that produce meshes. Further, the global illumination algorithm should handle point models with diffuse or specular material properties, thereby capturing all the light reflections of interest, namely diffuse reflections, specular reflections, specular refractions, and caustics.

Abstract: Point-sampled geometry has gained significant interest due to its simplicity. The lack of connectivity, touted as a plus, however creates difficulties in many operations, such as generating global illumination effects. This is especially true for a complex scene consisting of several models whose data is available as hard-to-segment aggregated point-based models. Inter-reflections in such complex scenes require knowledge of visibility between point pairs. Computing visibility is more difficult for point models than for polygonal models, since we do not have any surface or object information.

Point-to-point visibility is arguably one of the most difficult problems in rendering, since the interaction between two primitives depends on the rest of the scene. One way to reduce the difficulty is to cluster regions so that their mutual visibility is resolved at the group level. Most scenes admit such clustering, and the Visibility Map (V-Map) data structure we propose answers common rendering queries efficiently.
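The idea of resolving visibility at the group level can be illustrated with a small model. This is a minimal, hypothetical sketch and not the V-Map as implemented in the reports. It assumes that each octree node keeps a `visible` set of nodes it sees completely. Under that assumption, two leaves are visible if any ancestor of one lists any ancestor of the other. The names `Node`, `link` and `is_visible` are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # eq=False keeps identity hashing, so nodes can be stored in sets
class Node:
    """Hypothetical octree node carrying a V-Map style visible list."""
    name: str
    parent: Optional[Node] = field(default=None, repr=False)
    visible: set = field(default_factory=set, repr=False)

    def ancestors(self):
        """Yield this node, then each ancestor up to the root."""
        node = self
        while node is not None:
            yield node
            node = node.parent


def link(a: Node, b: Node) -> None:
    """Record that every point under `a` sees every point under `b`, and vice versa."""
    a.visible.add(b)
    b.visible.add(a)


def is_visible(p: Node, q: Node) -> bool:
    """p and q are mutually visible if some ancestor of p lists some ancestor of q."""
    q_chain = set(q.ancestors())
    return any(not q_chain.isdisjoint(node.visible) for node in p.ancestors())


# Usage: two sibling clusters whose visibility is resolved at the group level.
root = Node("root")
left, right = Node("L", root), Node("R", root)
a, b, c = Node("a", left), Node("b", right), Node("c", left)
link(left, right)        # one cluster-level link covers all leaf pairs under L and R
print(is_visible(a, b))  # True
print(is_visible(a, c))  # False: no link was recorded for this pair
```

Storing a link at the highest level where visibility is uniform keeps the lists short. Partially visible node pairs would need to be refined at their children, which this sketch omits.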

The V-Map is used in a diffuse global illumination solution for point models based on the Fast Multipole Method (FMM). Both the V-Map and the FMM algorithm are implemented in parallel on the GPU, yielding considerable speed-ups. Here, the V-Map answers view-independent point-to-point visibility queries between octree nodes.
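In a diffuse solution, visibility answers are consumed by a point-to-point transfer term. The sketch below uses the standard diffuse form factor between two small splats, F_ij ≈ cos θ_i cos θ_j A_j V_ij / (π r²). It applies that factor in one Jacobi step of B_i = E_i + ρ_i Σ_j F_ij B_j. The exact kernel used in the reports may differ. The direct O(n²) sum shown here is the computation that FMM accelerates. The function names and the `visible(i, j)` callback (standing in for a V-Map query) are hypothetical.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def form_factor(p_i, n_i, p_j, n_j, area_j, visible=True):
    """Point-to-disk approximation of F_ij = cos_i * cos_j * A_j * V_ij / (pi * r^2)."""
    if not visible:                  # V_ij = 0, e.g. as reported by a visibility query
        return 0.0
    d = _sub(p_j, p_i)
    r2 = _dot(d, d)
    r = math.sqrt(r2)
    cos_i = _dot(n_i, d) / r         # angle at the receiving splat
    cos_j = -_dot(n_j, d) / r        # angle at the sending splat
    if cos_i <= 0.0 or cos_j <= 0.0:
        return 0.0                   # at least one splat faces away from the other
    return cos_i * cos_j * area_j / (math.pi * r2)


def bounce(splats, radiosity, emission, albedo, visible):
    """One Jacobi step of B_i = E_i + rho_i * sum_j F_ij * B_j, summed directly in O(n^2).

    splats: list of (position, unit normal, area); visible(i, j) -> bool.
    """
    out = []
    for i, (p_i, n_i, _) in enumerate(splats):
        gathered = sum(
            form_factor(p_i, n_i, p_j, n_j, a_j, visible(i, j)) * radiosity[j]
            for j, (p_j, n_j, a_j) in enumerate(splats)
            if j != i
        )
        out.append(emission[i] + albedo[i] * gathered)
    return out


# Usage: an emitting splat facing a reflecting splat one unit away.
splats = [((0, 0, 0), (0, 0, 1), 0.01), ((0, 0, 1), (0, 0, -1), 0.01)]
B = bounce(splats, [1.0, 0.0], [1.0, 0.0], [0.5, 0.5], lambda i, j: True)
```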

We then extended the V-Map to trace caustic photons through the point-model scene and capture them on the target diffuse surfaces. The visible list (part of the V-Map) of the light source and its ancestors allows rays to be sent only to visible specular leaf nodes. This saves a lot of time, since we do not have to send packets of rays in every direction from the light source in search of probable caustic generators. Normal fields are generated for each specular leaf to enable accurate secondary ray directions. The number of photons to be sent, and where to send them, are set in accordance with the emissive power of the light source and the area occupied by each splat as seen from the light source (using the subtended solid angle). A kd-tree organizes the caustic photon map, enabling fast caustic photon retrieval during ray tracing.
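One way to tie the photon count to emissive power and subtended solid angle is sketched below. It assumes an isotropic point light whose total photon count is proportional to its power; `photons_per_watt` is a hypothetical tuning parameter. Each visible specular splat is treated as a small disk subtending Ω ≈ A cos θ / r², so it receives the fraction Ω / (4π) of the emitted photons. This is an illustrative allocation rule, not necessarily the one used in the reports.

```python
import math


def splat_solid_angle(light_pos, center, normal, area):
    """Small-disk approximation Omega ~= A * cos(theta) / r^2 (valid when A << r^2)."""
    d = [c - l for c, l in zip(center, light_pos)]
    r2 = sum(x * x for x in d)
    cos_t = -sum(n * x for n, x in zip(normal, d)) / math.sqrt(r2)
    return area * max(0.0, cos_t) / r2   # splats facing away from the light get nothing


def photon_budget(light_pos, power, splats, photons_per_watt=1000):
    """Photons per visible specular splat for an isotropic point light.

    splats: {id: (center, unit normal, area)}, assumed to come from the light's visible list.
    Returns (photons per splat, flux carried by each photon).
    """
    total = photons_per_watt * power     # photons emitted over the full sphere
    flux_per_photon = power / total
    budget = {
        sid: round(total * splat_solid_angle(light_pos, c, n, a) / (4.0 * math.pi))
        for sid, (c, n, a) in splats.items()
    }
    return budget, flux_per_photon


# Usage: a 0.04-area splat two units above a 100 W light subtends 0.01 sr.
budget, flux = photon_budget((0, 0, 0), 100.0, {"s": ((0, 0, 2), (0, 0, -1), 0.04)})
```

Because each photon carries the same flux, splats that look larger from the light automatically receive proportionally more of the light's energy.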

We use an efficient, albeit naive, octree traversal algorithm on the GPU for both photon tracing and ray-traced rendering. The GPU texture cache is used to store the octree efficiently and to traverse it quickly when answering first-object intersection queries for ray tracing. Point data is also organized into a 1D texture in GPU memory, directly accessible from the leaves of the octree. Provision for multiple leaves per splat is added to avoid undesirable holes and artifacts during ray-traced rendering. Coherent rays are used so that GPU warps remain well behaved, and super-sampling is performed to avoid aliasing artifacts.
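A first-object intersection query over an octree of splats can be sketched on the CPU as below. The reports traverse the octree on the GPU through textures. This recursive Python version only illustrates the logic. The node layout (dicts with `lo`, `hi`, `children`, `splats`) and circular splats given as `(center, normal, radius)` are assumptions. Children are visited nearest-first, and the search stops once a child starts beyond the closest hit found so far. A splat stored in several leaves is simply found again at the same distance.

```python
import math


def ray_box(o, d, lo, hi):
    """Slab test: return (t_enter, t_exit) along the ray, or None on a miss."""
    t0, t1 = 0.0, math.inf
    for a in range(3):
        if d[a] == 0.0:
            if not lo[a] <= o[a] <= hi[a]:
                return None              # parallel to this slab and outside it
            continue
        ta, tb = (lo[a] - o[a]) / d[a], (hi[a] - o[a]) / d[a]
        t0, t1 = max(t0, min(ta, tb)), min(t1, max(ta, tb))
    return (t0, t1) if t0 <= t1 else None


def ray_splat(o, d, center, normal, radius):
    """Intersect the splat's plane, then accept the hit if it lies within the radius."""
    denom = sum(n * x for n, x in zip(normal, d))
    if abs(denom) < 1e-12:
        return None                      # ray is parallel to the splat
    t = sum(n * (c - p) for n, c, p in zip(normal, center, o)) / denom
    if t <= 0.0:
        return None
    hit = [p + t * x for p, x in zip(o, d)]
    if sum((h - c) ** 2 for h, c in zip(hit, center)) > radius ** 2:
        return None
    return t


def first_hit(node, o, d, best=math.inf):
    """Return (t, splat) for the nearest splat hit under `node`, or (best, None)."""
    span = ray_box(o, d, node["lo"], node["hi"])
    if span is None or span[0] > best:
        return best, None
    hit = None
    for splat in node.get("splats", []):
        t = ray_splat(o, d, *splat)
        if t is not None and t < best:
            best, hit = t, splat
    entries = []
    for child in node.get("children", []):
        s = ray_box(o, d, child["lo"], child["hi"])
        if s is not None:
            entries.append((s[0], id(child), child))   # id() breaks ties without comparing dicts
    for t_enter, _, child in sorted(entries):
        if t_enter > best:
            break                        # remaining children start beyond the current hit
        t, s = first_hit(child, o, d, best)
        if s is not None:
            best, hit = t, s
    return best, hit


# Usage: two leaves along x, each holding one splat facing the ray origin.
left_splat = ((0.5, 1.0, 1.0), (-1.0, 0.0, 0.0), 0.2)
right_splat = ((1.5, 1.0, 1.0), (-1.0, 0.0, 0.0), 0.2)
tree = {"lo": (0, 0, 0), "hi": (2, 2, 2), "children": [
    {"lo": (0, 0, 0), "hi": (1, 2, 2), "splats": [left_splat]},
    {"lo": (1, 0, 0), "hi": (2, 2, 2), "splats": [right_splat]},
]}
t, hit = first_hit(tree, (-1.0, 1.0, 1.0), (1.0, 0.0, 0.0))
```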

As the diffuse global illumination solution is view-independent, it allows an interactive walk-through of the input scene of point models. Specular effects, however, are view-dependent and must be recomputed for every new viewpoint in the ray-traced frame. Thus, if specular effect generation takes a lot of time, we lose the ability to walk through the scene interactively. We wish to retain this advantage, and therefore aim for a fast, time-efficient algorithm for specular effect generation.

Issues that still need to be handled include:

- the shading applied to glossy specular objects;
- blending of color when a ray hits multiple splats in an octree leaf;
- blending of color for a single pixel under super-sampling;
- handling semi-transparent objects and objects that are both reflective and refractive;
- setting up various material properties.

The base system is ready; only some tweaks remain to make the output images visually pleasing.
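The two blending issues (several splats hit within one leaf, and several sub-pixel samples per pixel) can each be handled with a weighted average. The sketch below shows one common choice, not the reports' method. It assumes a Gaussian weight on each hit's distance from its splat center, normalized by the splat radius, and a box filter over an n × n grid of sub-pixel samples. The `sigma` value and the `shade` callback are hypothetical.

```python
import math


def blend_splats(hits, sigma=0.5):
    """Blend colors of overlapping splats hit by one ray.

    hits: list of (rgb color, hit distance from splat center / splat radius).
    """
    wsum, acc = 0.0, [0.0, 0.0, 0.0]
    for color, u in hits:
        w = math.exp(-(u * u) / (2.0 * sigma * sigma))  # weight falls off toward the rim
        wsum += w
        acc = [a + w * c for a, c in zip(acc, color)]
    return [a / wsum for a in acc] if wsum > 0.0 else [0.0, 0.0, 0.0]


def supersample(shade, px, py, n=2):
    """Average an n x n grid of sub-pixel samples (box filter) for pixel (px, py)."""
    acc = [0.0, 0.0, 0.0]
    for i in range(n):
        for j in range(n):
            c = shade(px + (i + 0.5) / n, py + (j + 0.5) / n)
            acc = [a + x for a, x in zip(acc, c)]
    return [a / (n * n) for a in acc]


# Usage: an edge through the middle of pixel (0, 0) averages to half intensity.
edge = supersample(lambda x, y: [1.0, 1.0, 1.0] if x < 0.5 else [0.0, 0.0, 0.0], 0, 0, 2)
```

Weighting by distance from the splat center makes overlapping splats fade into each other instead of producing hard seams. The regular sub-pixel grid keeps neighboring rays coherent, which suits GPU warps.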

The diffuse global illumination and ray-tracing systems have been correctly merged so as to give a

complete global illumination package for point models. We, thus, have a \emph{two--pass global

illumination solver for point models}. The input to the system is a scene consisting of both

diffuse and specular point models. First pass calculates the diffuse illumination maps,

followed by the second pass for specular effects. Finally, the scene is rendered using our

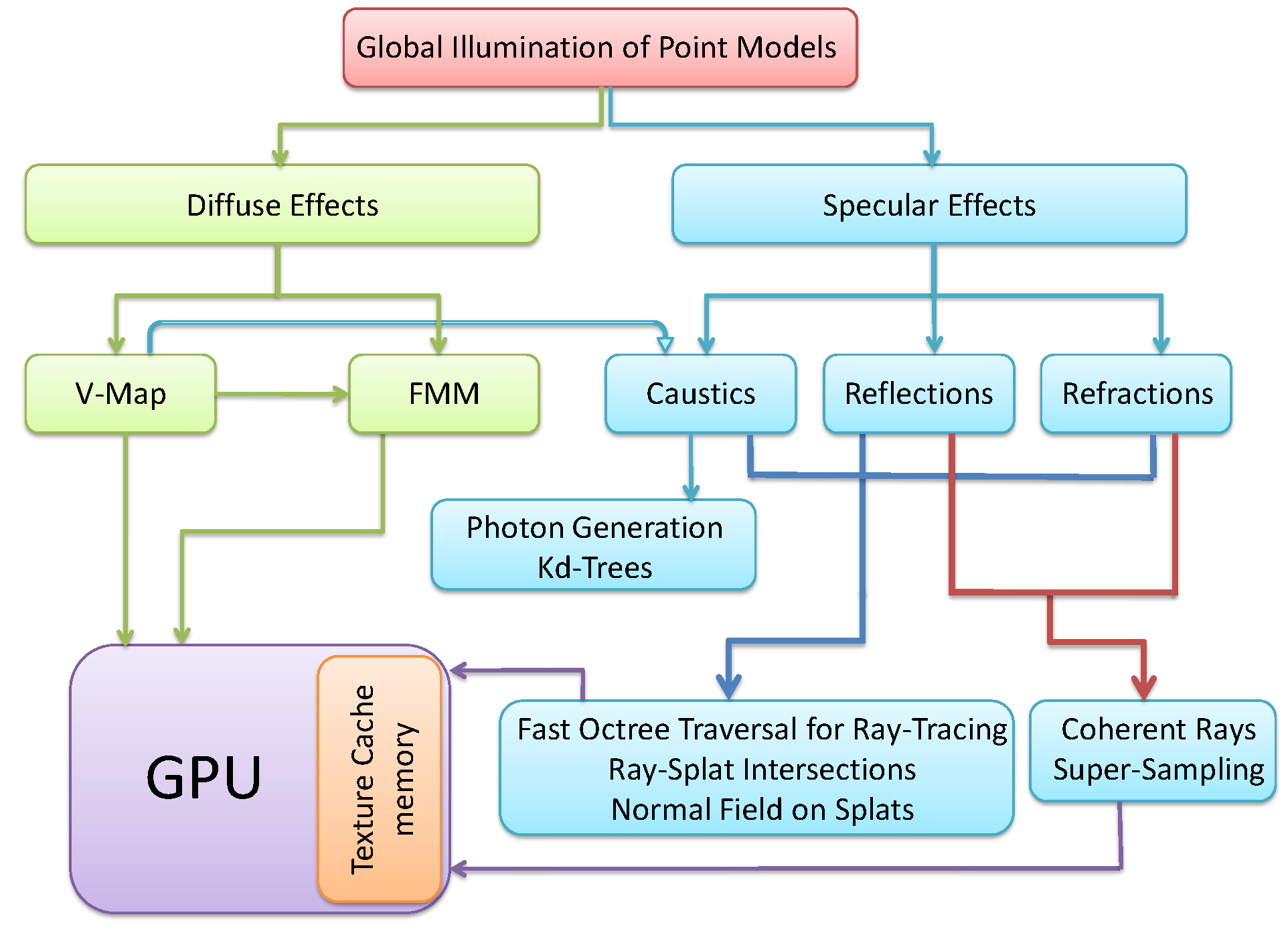

ray-tracing technique. As my last note, I would like to provide, for your reference, a

simple work-flow of the work done by me during the entire period of my Ph.d

and how each module gels with the other to give a complete story to my thesis.

The following figure gives a pictorial view of the work-flow of my thesis.

[report] [presentation]

- Annual Progress Seminar 4

Abstract: Advances in scanning technologies and the rapidly growing complexity of geometric objects have motivated the use of point-based geometry as an alternative surface representation, both for efficient rendering and for flexible geometry processing of highly complex 3D models. Owing to their fundamental simplicity, points have motivated a variety of research on topics such as shape modeling, object capturing, simplification, rendering, and hybrid point-polygon methods.

Global illumination for point models is an emerging and interesting problem. We use the Fast Multipole Method (FMM), a robust technique for evaluating the combined effect of pairwise interactions of $n$ data sources, together with the light transport kernel for inter-reflections in point models, to compute a description of the diffuse illumination in the form of **illumination maps**. FMM by itself exhibits a high degree of parallelism that can be exploited to achieve multi-fold speed-ups.
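The saving that FMM exploits comes from far-field approximation. The combined effect of a well-separated cluster of sources can be summarized by a compact expansion instead of being summed pair by pair. The toy sketch below keeps only the lowest-order (monopole) term of that expansion, with an assumed 1/r kernel. Full FMM uses higher-order multipole and local expansions plus translation operators between octree levels, all of which are omitted here. The function names are illustrative.

```python
import math


def direct(target, sources):
    """Exact sum of w / |target - x| over all (x, w) sources: O(n) per target."""
    return sum(w / math.dist(target, x) for x, w in sources)


def monopole(sources):
    """Collapse a cluster into its total weight placed at the weighted centroid."""
    total = sum(w for _, w in sources)
    dim = len(sources[0][0])
    centroid = tuple(sum(w * x[k] for x, w in sources) / total for k in range(dim))
    return centroid, total


def far_field(target, sources):
    """Zeroth-order far-field approximation of `direct`: O(1) once the cluster is summarized."""
    centroid, total = monopole(sources)
    return total / math.dist(target, centroid)


# Usage: a tight cluster of four unit sources seen from 10 units away.
cluster = [((0.1, 0.0, 0.0), 1.0), ((-0.1, 0.0, 0.0), 1.0),
           ((0.0, 0.1, 0.0), 1.0), ((0.0, -0.1, 0.0), 1.0)]
exact = direct((10.0, 0.0, 0.0), cluster)
approx = far_field((10.0, 0.0, 0.0), cluster)
```

The approximation error shrinks rapidly as the ratio of cluster size to distance decreases. This is why the octree's separation criterion decides which node pairs may interact through expansions and which need direct evaluation.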

Graphics Processing Units (GPUs), traditionally designed for graphics-specific computations, now attract considerable interest for computations that go beyond computer graphics: general-purpose computation on GPUs, or "GPGPU". GPUs may be viewed as data-parallel compute co-processors that can significantly improve computational performance, especially for algorithms that exhibit a sufficiently high degree of parallelism. One such algorithm is the Fast Multipole Method (FMM). This report describes in detail the strategies for parallelizing all phases of the FMM and discusses several techniques to optimize its computational performance on GPUs.

The heart of the FMM lies in its clever use of its underlying data structure, the octree. We present a novel algorithm for constructing octrees in parallel on GPUs, which will eventually be combined with the GPU-based parallel FMM framework.
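A widely used building block for parallel octree construction is the Morton (Z-order) key. It is shown here as a general technique, not necessarily the algorithm in the report. Each point is quantized to a 2^10 grid per axis (an assumed resolution), and its coordinate bits are interleaved into a 30-bit key. After sorting, the points inside any octree cell at level l form a contiguous run of keys sharing the same top 3l bits. Each key is computed independently, which maps to one GPU thread per point followed by a parallel sort.

```python
def part1by2(v):
    """Spread the low 10 bits of v so that two zero bits separate consecutive bits."""
    v &= 0x3FF
    v = (v | (v << 16)) & 0xFF0000FF
    v = (v | (v << 8)) & 0x0300F00F
    v = (v | (v << 4)) & 0x030C30C3
    v = (v | (v << 2)) & 0x09249249
    return v


def morton3(x, y, z):
    """Interleave three 10-bit integers into a 30-bit Morton key (x in the lowest bit)."""
    return part1by2(x) | (part1by2(y) << 1) | (part1by2(z) << 2)


def sorted_keys(points, lo, hi, bits=10):
    """Quantize points inside the box [lo, hi] and return (key, index) pairs sorted by key."""
    n = (1 << bits) - 1
    keys = []
    for i, p in enumerate(points):
        q = [max(0, min(n, int((p[a] - lo[a]) / (hi[a] - lo[a]) * (n + 1)))) for a in range(3)]
        keys.append((morton3(*q), i))
    return sorted(keys)


def node_at_level(key, level, bits=10):
    """Octree cell id at `level`: the top 3 * level bits of the 3 * bits-bit key."""
    return key >> (3 * (bits - level))


# Usage: two points in opposite octants of the unit cube.
keys = sorted_keys([(0.9, 0.9, 0.9), (0.1, 0.1, 0.1)], (0, 0, 0), (1, 1, 1))
level1 = [node_at_level(k, 1) for k, _ in keys]
```

Internal nodes can then be identified by scanning adjacent keys for shared prefixes, so no pointer-chasing insertion is needed during construction.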

Correct global illumination results for point models require knowledge of mutual point-pair visibility. **Visibility Maps (V-Maps)** have been designed for this purpose. A parallel implementation of the V-Map on the GPU offers considerable performance improvements and is detailed in this report.

A complete global illumination solution for point models should cover both diffuse and specular (reflections, refractions, and caustics) effects. Diffuse global illumination is handled by generating illumination maps. Achieving specular effects is part of future work.

[report] [presentation]

- Annual Progress Seminar 3

Abstract: Advances in scanning technologies and the rapidly growing complexity of geometric objects have motivated the use of point-based geometry as an alternative surface representation, both for efficient rendering and for flexible geometry processing of highly complex 3D models. Traditional geometry-based rendering methods use triangles as primitives, which makes rendering complexity depend on the complexity of the model to be rendered. Point-based models overcome that problem, as points do not maintain connectivity information and represent only surface information. Owing to their fundamental simplicity, points have motivated a variety of research on topics such as shape modeling, object capturing, simplification, rendering, and hybrid point-polygon methods.

Global illumination for point models is an emerging and interesting problem. The lack of connectivity, touted as a plus, however creates difficulties in generating global illumination effects. This is especially true for a complex scene consisting of several models whose data is available as hard-to-segment aggregated point models. Inter-reflections in such complex scenes require knowledge of visibility between point pairs. Computing visibility is more difficult for points than for polygonal models, since we do not have any surface or object information. In this report we present a novel, hierarchical, fast, and memory-efficient algorithm that computes a description of mutual visibility in the form of a visibility map. Ray shooting and visibility queries can be answered in sub-linear time using this data structure. We evaluate our scheme analytically, qualitatively, and quantitatively, and conclude that these maps are desirable.

We use the Fast Multipole Method (FMM), a robust technique for evaluating the combined effect of pairwise interactions of n data sources, together with the light transport kernel for inter-reflections in point models, to compute a description of the diffuse illumination in the form of illumination maps. Parallel computation of the FMM is considered a challenging problem because the computation depends on the distribution of the data sources, which usually leads to dynamic data decomposition and load-balancing problems. In this report, we present an algorithm for a parallel implementation of FMM that requires no dynamic data decomposition or load-balancing steps. We also provide the hints necessary to implement a similar algorithm on a Graphics Processing Unit (GPU) as a "GPGPU" application.

A complete global illumination solution for point models should cover both diffuse and specular (reflections, refractions, and caustics) effects. Diffuse global illumination is handled by generating illumination maps. For specular effects, we use the Splat-based Ray Tracing technique to handle reflections and refractions in the scene, and we generate Caustic Maps using optimized photon generation and tracing algorithms. We further discuss a time-efficient kNN query solver required for fast retrieval of caustic photons during ray-traced rendering.
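The kNN query behind caustic photon retrieval can be sketched with a kd-tree and a bounded max-heap. The result feeds the standard photon-map density estimate E(x) ≈ ΣΦ_p / (π r_k²), where r_k is the distance to the k-th nearest photon. For a diffuse receiver with albedo ρ, the outgoing radiance is then ρE/π. This is a generic textbook version under assumed data layouts (photons as `(position, power)` tuples, nodes as plain tuples), not the solver described in the report.

```python
import heapq
import math


def build(photons, depth=0):
    """Build a kd-tree over (position, power) photons, cycling the split axis x, y, z."""
    if not photons:
        return None
    axis = depth % 3
    photons = sorted(photons, key=lambda p: p[0][axis])
    mid = len(photons) // 2
    return (photons[mid], axis,
            build(photons[:mid], depth + 1), build(photons[mid + 1:], depth + 1))


def knn(node, x, k, heap=None):
    """Collect the k photons nearest to x in a max-heap of (-dist^2, tiebreak, photon)."""
    if heap is None:
        heap = []
    if node is None:
        return heap
    photon, axis, left, right = node
    d2 = sum((a - b) ** 2 for a, b in zip(photon[0], x))
    item = (-d2, id(photon), photon)
    if len(heap) < k:
        heapq.heappush(heap, item)
    elif d2 < -heap[0][0]:
        heapq.heapreplace(heap, item)    # evict the current farthest photon
    diff = x[axis] - photon[0][axis]
    near, far = (left, right) if diff < 0 else (right, left)
    knn(near, x, k, heap)
    if len(heap) < k or diff * diff < -heap[0][0]:
        knn(far, x, k, heap)             # the far side may still hold closer photons
    return heap


def irradiance(tree, x, k):
    """Density estimate E(x) ~ sum(power) / (pi * r_k^2); assumes at least one photon."""
    heap = knn(tree, x, k)
    r2 = -heap[0][0]                     # squared distance to the k-th nearest photon
    return sum(p[1] for _, _, p in heap) / (math.pi * r2)


# Usage: ten unit-power photons along the x axis, queried at the origin.
tree = build([((float(i), 0.0, 0.0), 1.0) for i in range(10)])
nearest = sorted(p[0][0] for _, _, p in knn(tree, (0.0, 0.0, 0.0), 3))
```

The max-heap keeps the current k-th distance at its root. That distance is exactly the pruning radius needed to skip far subtrees, and it is also the r_k used by the density estimate.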

[report] [presentation]

- Annual Progress Seminar 2

Abstract: Point-based methods have gained significant interest due to their simplicity. The lack of connectivity, touted as a plus, however creates difficulties in generating global illumination effects. We are interested in inter-reflections in complex scenes consisting of several models, the data for which are available as hard-to-segment aggregated point-based models.

In this report we use the Fast Multipole Method (FMM), which has a natural point-based basis, together with the light transport kernel for inter-reflection, to compute a description of the diffuse illumination in the form of illumination maps. These illumination maps may subsequently be rendered using methods in the literature. We also present a hierarchical visibility determination module suitable for point-based models.

[report] [presentation]

- Annual Progress Seminar 1

Abstract: The need for mosaicing arises when we want to stitch two or more images together to view them as a single continuous image. There are various ways to construct mosaics of images, one of them being spherical mosaics. As the name suggests, a spherical mosaic allows any number of images to be merged into a single, seamless view, simulating the image that would be acquired by a camera with a spherical field of view.

In this report we focus on an algorithm for constructing spherical mosaics from a collection of images taken from a common optical center. The inputs to the algorithm are:

- partially overlapping images;
- an adjacency map relating the images;
- initial estimates of the rotations relating each image to a specified base image;
- approximate internal calibration information for the camera.

The algorithm's output is a rotation relating each image to the base image, together with revised estimates of the camera's internal parameters. We also compare two different optimization techniques (local and global) and show why the global optimization technique is superior to the local one.

We study the details of this algorithm and also describe our work in this area, which overcomes some limitations of the algorithm available in the literature.
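The algorithm estimates two things: a rotation per image and the camera's internal parameters. Together these place every pixel on the viewing sphere. With a shared optical center, pixel x of image i corresponds to the base-frame direction d = R_i K⁻¹ x, and the mosaic stores d as longitude and latitude. The sketch below assumes a simple pinhole K (focal length `f`, principal point `(cx, cy)`, no skew or lens distortion). It also assumes that R_i rotates image-camera coordinates into base-camera coordinates. It shows only this forward mapping, not the optimization that refines R_i and K.

```python
import math


def pixel_to_ray(u, v, f, cx, cy):
    """K^{-1} [u, v, 1]^T for a pinhole camera with square pixels and zero skew."""
    return [(u - cx) / f, (v - cy) / f, 1.0]


def mat_vec(m, x):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[r][c] * x[c] for c in range(3)) for r in range(3)]


def rot_y(a):
    """Rotation by angle a (radians) about the camera y axis, i.e. a pan."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def to_sphere(u, v, R, f, cx, cy):
    """Map pixel (u, v) to (longitude, latitude) on the base camera's viewing sphere.

    Assumes R rotates image-camera coordinates into base-camera coordinates.
    """
    d = mat_vec(R, pixel_to_ray(u, v, f, cx, cy))
    lon = math.atan2(d[0], d[2])                  # angle around the base y axis
    lat = math.atan2(d[1], math.hypot(d[0], d[2]))
    return lon, lat


# Usage: the principal point of an image panned by 0.3 rad lands at longitude 0.3.
lon, lat = to_sphere(320, 240, rot_y(0.3), 500.0, 320, 240)
```

Pixels of overlapping images that land at the same (lon, lat) should see the same scene point. The optimization adjusts R_i and K to minimize the residual where they disagree.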

[report] [presentation]