CS 747: Foundations of Intelligent and Learning Agents

(Autumn 2018)

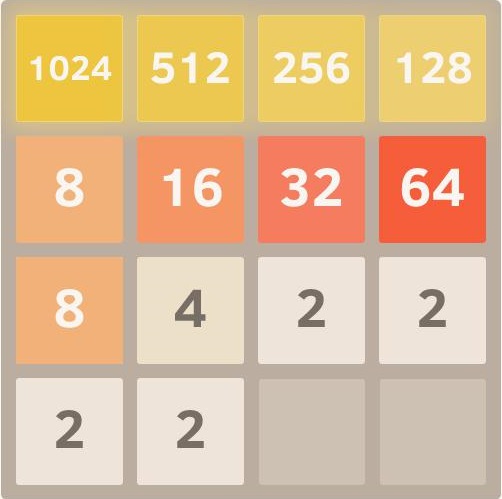

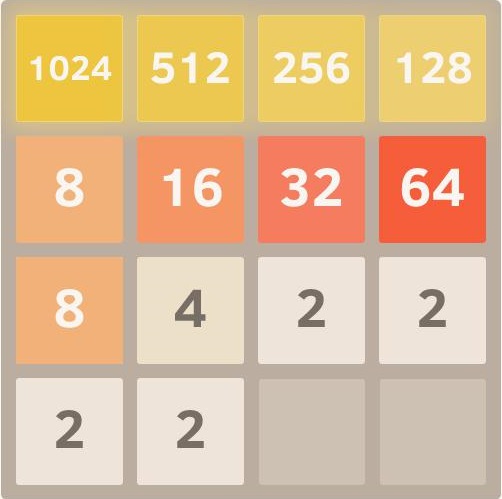

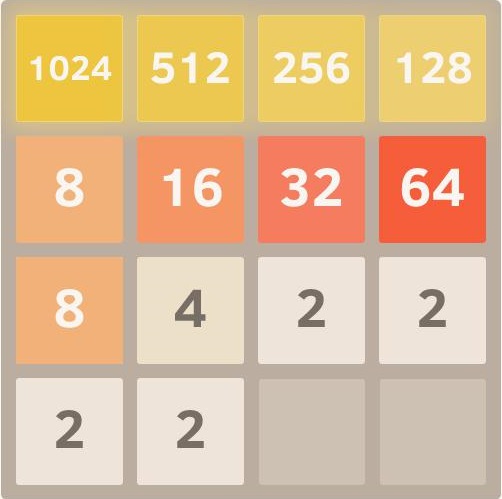

(Picture source: https://www.softwire.com/blog/wp-content/uploads/2014/07/2048Example.jpg)

Instructor

Shivaram Kalyanakrishnan

Office: Room 220, New CSE Building

Phone: 7704

E-mail: shivaram@cse.iitb.ac.in

Teaching Assistants

Sabyasachi Ghosh

E-mail: 174050001@iitb.ac.in

Akshay Arora

E-mail: 173050021@iitb.ac.in

Rahul Sharma

E-mail: 173050019@iitb.ac.in

Parth Patel

E-mail: 173050032@iitb.ac.in

Dhaval Limdiwala

E-mail: 173050061@iitb.ac.in

Class

Lectures will be held in LH 302, Lecture Hall Complex during Slot 6:

11.05 a.m. – 12.30 p.m. Wednesdays and Fridays.

Office hours will immediately follow class and be up to 1.15

p.m. on Wednesdays and Fridays. Meetings can also be arranged by

appointment.

Course Description

Today's computing systems are becoming increasingly adaptive and

autonomous: they are akin to intelligent, decision-making

"agents". With its roots in artificial intelligence and machine

learning, this course covers the foundational principles of designing

such agents. Topics covered include: (1) agency, intelligence, and

learning; (2) exploration and multi-armed bandits; (3) Markov Decision

Problems and planning; (4) reinforcement learning; (5) multi-agent

systems and multi-agent learning; and (6) case studies.

The course will adopt a "hands-on" approach, with programming

assignments designed to highlight the relationship between theory and

practice. Case studies, as well as invited talks from experts, will

offer an "end-to-end" view of deployed agents. It is hoped that

students can apply the learnings from this course to the benefit of

their respective pursuits in various areas of computer science and

related fields.

Prerequisites

The course is open to all Ph.D. students, all masters students, and

undergraduate/dual-degree students in their fourth (or higher) year of

study.

The course does not formally have other courses as

prerequisites. However, class lectures and assignments will assume

that the student is comfortable with probability and

algorithms. Introduction

to Probability by Grinstead and Snell is an excellent resource on

basic probability. Any student who is not comfortable with the

contents of chapters 1 through 7 (and is unable to solve the

exercises) is advised against taking CS 747.

The course has an intensive programming component: based on ideas

discussed in class, the student must be able to independently design,

implement, and evaluate programs in a language of his/her choice. The

student must be prepared to spend a significant amount of time on the

programming component of the course.

Evaluation

Grades will be based on four programming assignments, each

worth 10 marks; a course project worth 20 marks; a mid-semester

examination worth 15 marks; and an end-semester examination worth 25

marks.

The programming assignments and project must be turned in through

Moodle. Late submissions will not be evaluated; they will receive no

marks.

Students auditing the course must score 50 or more marks in the

course to be awarded an "AU" grade.

Academic Honesty

Students are expected to adhere to the highest standards of

integrity and academic honesty. Acts such as copying in the

examinations and sharing code for the programming

assignments will be dealt with strictly, in accordance with the

institute's procedures

and disciplinary

actions for academic malpractice.

Texts and References

Reinforcement Learning: An Introduction, Richard S. Sutton and

Andrew G. Barto, 2nd edition

2018. On-line

version.

Algorithms for Reinforcement Learning, Csaba Szepesvári,

Morgan & Claypool,

2009. On-line

version.

Selected research papers.

-

A

Stochastic Approximation Method

Herbert Robbins and Sutton Monro, 1951

-

Asymptotically

Efficient Adaptive Allocation Rules

T. L. Lai and Herbert Robbins, 1985

-

Q-learning

Christopher J. C. H. Watkins and Peter Dayan, 1992

-

Foraging in an Uncertain Environment Using Predictive Hebbian Learning

P. Read Montague, Peter Dayan, and Terrence J. Sejnowski, 1994

-

Average Reward Reinforcement Learning: Foundations, Algorithms, and Empirical Results

Sridhar Mahadevan, 1996

-

Dopamine neurons report and error in the temporal prediction of reward during learning

Jeffrey R. Hollerman and Wolfram Schultz, 1998

-

Learning to Drive a Bicycle using Reinforcement Learning and Shaping

Jette Randløv and Preben Alstrøm, 1998

-

Policy invariance under reward transformations: Theory and application to reward shaping

Andrew Y. Ng, Daishi Harada, and Stuart Russell, 1999

-

Algorithms for Inverse Reinforcement Learning

Andrew Y. Ng and Stuart Russell, 2000

-

Convergence

Results for Single-Step On-Policy Reinforcement-Learning Algorithms

Satinder Singh, Tommi Jaakkola, Michael L. Littman, and Csaba Szepesvári, 2000

-

Finite-time

Analysis of the Multiarmed Bandit Problem

Peter Auer, Nicolò Cesa-Bianchi, and Paul Fischer, 2002

-

Tree-based Batch Mode Reinforcement Learning

Damien Ernst, Pierre Geurts, and Louis Wehenkel, 2005

-

Bandit based Monte-Carlo Planning

Levente Kocsis and Csaba Szepesvári, 2006

-

Learning Tetris Using the Noisy Cross-Entropy Method

István Szita and András Lőrincz, 2006

-

Half

Field Offense in RoboCup Soccer: A Multiagent Reinforcement Learning

Case Study

Shivaram Kalyanakrishnan, Yaxin Liu, and Peter Stone, 2007

-

Batch Reinforcement Learning

in a Complex Domain

Shivaram Kalyanakrishnan and Peter Stone, 2007

-

An Empirical Evaluation of Thompson Sampling

Olivier Chapelle and Lihong Li, 2011

-

The KL-UCB Algorithm for Bounded Stochastic Bandits and Beyond

Aurélien Garivier and Olivier Cappé, 2011

-

Learning Methods for Sequential Decision Making with Imperfect Representations

Shivaram Kalyanakrishnan, 2011

-

Human-level control through deep reinforcement learning

Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel

Veness, Marc G. Bellemare, Alex Graves, Martin Riedmiller, Andreas

K. Fidjeland, Georg Ostrovski, Stig Petersen, Charles Beattie, Amir

Sadik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Daan

Wierstra, Shane Legg, and Demis Hassabis, 2015

-

Mastering the game of Go with deep neural networks and tree search

David Silver, Aja Huang, Chris J. Maddison, Arthur Guez, Laurent Sifre, George van den Driessche, Julian Schrittwieser, Ioannis Antonoglou, Veda Panneershelvam, Marc Lanctot, Sander Dieleman, Dominik Grewe, John Nham, Nal Kalchbrenner, Ilya Sutskever, Timothy Lillicrap, Madeleine Leach, Koray Kavukcuoglu, Thore Graepel, and Demis Hassabis, 2016

-

Mastering the game of Go without human knowledge

David Silver, Julian Schrittwieser, Karen Simonyan, Ioannis Antonoglou, Aja Huang, Arthur Guez, Thomas Hubert, Lucas Baker, Matthew Lai, Adrian Bolton, Yutian Chen, Timothy Lillicrap, Fan Hui, Laurent Sifre, George van den Driessche, Thore Graepel, and Demis Hassabis, 2017.

-

Communication

This page will serve as the primary source of information regarding

the course, the schedule, and related announcements. The Moodle page

for the course will be used for sharing resources for the assignments,

and also for recording grades.

E-mail is the best means of communicating with the instructor;

students must send e-mail with "[CS747]" in the header, with a copy

marked to the TAs.

Schedule

-

July 18: Welcome; Introduction to the course; Coin-tossing game.

-

July 20: Multi-armed Bandits.

Reading: Sections 2, 2.1, 2.2, 2.3, Sutton and Barto (2018).

Summary: Definition of stochastic multi-armed bandit; Definition of

algorithm, ε-first and ε-greedy algorithms; Graph of

E[rt] versus t.

-

July 25: Multi-armed Bandits.

Reading: Section 1, Figure 1, Theorem 1, Auer et al. (2002).

Summary: Definition of regret; Achieving sublinear regret with

εt-greedy sampling; Lai and Robbins's lower bound

on regret; UCB algorithm.

Reference: Theorem 1, Lai and Robbins (1985).

-

July 27: Multi-armed Bandits.

Reading: Wikipedia page

on Hoeffding's

Inequality; Sections 1–3, Garivier and Cappé (2011);

Chapelle and Li (2011).

Summary: Hoeffding's Inequality; KL-UCB; Thompson Sampling.

-

August 1: Multi-armed Bandits.

Reading: Slides.

Summary: Proof of upper bound on the regret of UCB.

-

August 3: Markov Decision Problems.

Reading: Chapter 3, Sutton and Barto (2018).

Summary: Definition of Markov Decision Problem, policy, and value

function; representing MDPs through state transition diagrams;

existence of optimal policy.

Reference: MDP example used in class.

-

August 8: Markov Decision Problems.

Summary: Reward models (infinite discounted, total, finite horizon,

average); Episodic and continuing tasks; Discounting; Existence of

optimal policy; Derivation of Bellman's Equations.

Reference: Section 2.2, Mahadevan (1996).

-

August 10: Markov Decision Problems.

Reading: Appendix A, Szepesvári (2009).

Summary: Bellman's Optimality Equations; Bellman Operator and

Bellman Optimality Operator; Banach's Fixed Point Theorem; Proof of

contraction under max norm.

-

August 17: Markov Decision Problems.

Reading: Slides.

Summary: Value Iteration; Linear Programming for MDP Planning; Policy Improvement Theorem and Policy Iteration.

-

August 24: Reinforcement Learning.

Reading: Class Note 1.

Summary: The Reinforcement Learning problem; Assumption on reachability of states; Model-based learning algorithm for acting optimally in the limit.

-

August 29: Reinforcement Learning.

Reading: Sections 5, 5.1, 5.2, 5.3, Sutton and Barto (2018); Class Note 2.

Summary: Monte Carlo prediction; First Visit and Every Visit Monte Carlo methods; Monte Carlo ES algorithm for control.

-

August 31: Reinforcement Learning.

Reading: Sections 6, 6.1, 6.2, 6.3, Sutton and Barto (2018).

Summary: Monte Carlo prediction; First Visit and Every Visit Monte

Carlo methods; Monte Carlo ES algorithm for control.

Reference: Robbins and Monro (1951).

-

September 5: Reinforcement Learning.

Reading: Sections 7, 7.1, Sutton and Barto

(2018); TD(λ)

algorithm (Sutton and Barto, 1998).

Summary: n-step returns and λ-return; TD(λ) algorithm;

TD learning in animals.

References: Wikipedia

page on Classical Conditioning; Montague, Dayan, and Sejnowski

(1994); Hollerman and Schultz (1998).

-

September 7: Reinforcement Learning.

Reading: Sections 6.4, 6.5, 6.6, Sutton and Barto (2018).

Summary: Model-free control: Sarsa, Q-learning, Expected Sarsa; Need

for generalisation in practice; soccer as illustrative example.

References: Watkins and Dayan (1992); Singh et al. (2000); Section

2.1, Kalyanakrishnan et al. (2007).

-

September 12: Mid-semester examination.

-

September 19: Reinforcement Learning.

Reading: Sections 9, 9.1, 9.2, 9.3, 9.4, 11.4, Sutton and Barto

(2018).

Summary: Linear TD(λ); Geometric view of linear function

approximation.

-

September 26: Reinforcement Learning.

Reading: Sections 9.5, 10, 10.1, 10.2, 11, 11.1, 11.2, 11.3, Sutton and

Barto (2018).

Summary: Tsitsiklis and Van Roy's counter-example; divergence of

off-policy bootstrapping with linear function approximation; Problems

with function approximation for control; Tile coding.

-

September 28: Reinforcement Learning.

Reading: Chapter 4, Kalyanakrishnan (2011).

Summary: Tile coding (continued); Illustration of the effect of

representation on Value Function-based methods and Policy Search

methods in the game of Tetris.

References: Szita and Lőrincz (2006).

-

October 3: Reinforcement Learning.

Reading: Sections 3.1, 3.2.2, 3.3.5, Kalyanakrishnan (2011).

Summary: Policy Search methods: Hill Climbing, Cross-entropy method,

CMA-ES; strengths and weaknesses of Policy Search methods.

-

October 5: Reinforcement Learning.

Reading: Sections 13, 13.1, 13.2, Sutton and Barto (2018).

Summary: Policy gradient methods, Policy gradient theorem.

-

October 10: Reinforcement Learning.

Reading: Sections 13.3, 13.4, 13.5, Sutton and Barto (2018).

Summary: REINFORCE; REINFORCE with a baseline; Actor-critic methods.

-

October 12: Invited talk by Harshad Khadilkar: RL

applications in the real world: Some use cases in industrial

operations.

-

October 17: Reinforcement Learning.

Reading: Sections 8, 8.1, 8.2, 8.3, 8.4, Sutton and Barto (2018).

Summary: Model-based Reinforcement Learning; Dyna-Q; Batch Reinforcement Learning; Experience Replay.

-

October 24: Reinforcement Learning.

Reading: Sections 8.5, 8.6, 8.7, Sutton and Barto (2018); Kalyanakrishnan and Stone (2007).

Summary: Fitted Q Iteration; Trajectory Sampling; Full and sample backups.

Reference: Ernst, Geurts, and Wehenkel (2005).

-

October 26: Reinforcement Learning.

Reading: Sections 8.8, 8.9, 8.10, 8.11, Sutton and Barto (2018)

Summary: Real-time Dynamic Programming, Roll-out Algorithms; Monte Carlo Tree Search (UCT).

Reference: Kocsis and Szepesvári, 2006

-

October 31: Reinforcement Learning.

Reading: Silver et al. (2016).

Summary: DQN algorithm on ATARI games; AlphaGo; AlphaGo Zero; AlphaZero.

Reference: Mnih et al. (2015); Silver et al. (2017); Wikipedia entry on AlphaZero.

-

November 2: Reward shaping; Inverse Reinforcement Learning.

Reading: Randløv and Alstrøm (1998); Ng et al. (1999); Ng and Russell (2000).

Summary: Purpose of shaping rewards; bicycle control as example; necessary and sufficient conditions for shaping rewards; Inverse reinforcement learning problem; non-uniqueness of reward function, LP formulation.

-

November 9: Invited talk by Ankur A. Kulkarni: Introduction to Game Theory.

-

November 14: End-semester examination.

Assignments

-

Programming Assignment 1 (10 marks).

Announced August 8, 2018; due 11.55 p.m., August 19, 2018.

-

Programming Assignment 2 (10 marks).

Announced August 30, 2018; due 11.55 p.m.,September 9, 2018.

-

Programming Assignment 3 (10 marks).

Announced September 26, 2018; due 11.55 p.m., October 7, 2018.

-

Project Proposal (5 marks).

Announced September 28, 2018; due 11.55 p.m., October 18, 2018.

-

Project (15 marks).

Announced October 24, 2018; due 11.55 p.m., November 23, 2018.

-

Programming Assignment 4 (10 marks).

Announced October 30, 2018; due 11.55 p.m., November 11, 2018.