CS 747: Foundations of Intelligent and Learning Agents

(Autumn 2022)

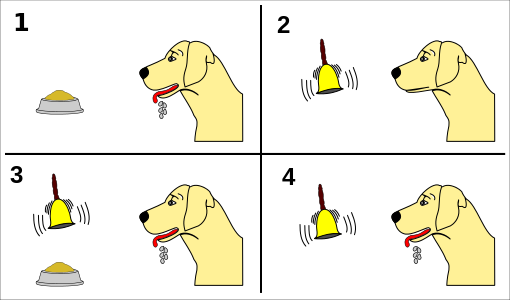

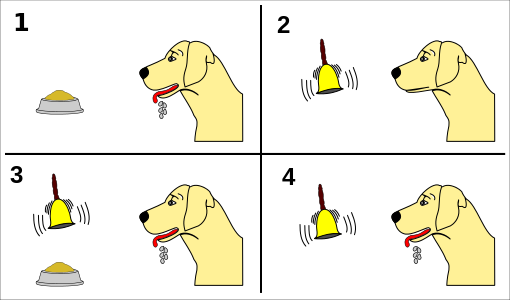

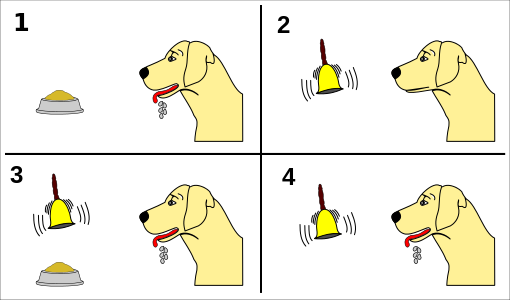

(Picture source: Maxxl2, CC BY-SA 4.0, via Wikimedia Commons)

(Page last edited .)

Instructor

Shivaram Kalyanakrishnan

Office: Room 220, New CSE Building

Phone: 7704

E-mail: shivaram@cse.iitb.ac.in

Teaching Assistants

Santhosh Kumar G.

E-mail: santhoshkg@iitb.ac.in

Ashutosh Sathe

E-mail: absathe@cse.iitb.ac.in

Aishwarya Mishra

E-mail: 213050010@iitb.ac.in

Vibhav Aggarwal

E-mail: vibhav.agg@iitb.ac.in

Adit Akarsh

E-mail: 19d070003@iitb.ac.in

Thomas Jacob

E-mail: 190070068@iitb.ac.in

Bhavini Jeloka

E-mail: bhavini_jeloka@iitb.ac.in

Aaron Jerry Ninan

E-mail: 190100001@iitb.ac.in

Sheel Shah

E-mail: 19d070052@iitb.ac.in

Meetings

Lectures will be held during Slot 14: 5.30 p.m. – 6.55

p.m. Tuesdays and Fridays in LA 001. The instructor will be

available for consultation immediately following class, up to 7.30

p.m., on both Tuesdays and Fridays. He will also hold office hours

(220, New CSE Building) 11.00 a.m. - 12.00 p.m. Wednesdays.

Course Description

Today's computing systems are increasingly adaptive and autonomous:

they are akin to intelligent, decision-making "agents". With its roots

in artificial intelligence and machine learning, this course covers

the foundational principles of designing such agents. Topics covered

include: (1) agency, intelligence, and learning; (2) exploration and

multi-armed bandits; (3) Markov Decision Problems and planning; (4)

reinforcement learning; (5) multi-agent systems and multi-agent

learning; and (6) case studies.

The course will adopt a "hands-on" approach, with programming

assignments designed to highlight the relationship between theory and

practice. Case studies will offer an end-to-end view of deployed

agents. It is hoped that students can apply the learnings from this

course to the benefit of their respective pursuits in various areas of

computer science and related fields.

Prerequisites

The course is open to all Ph.D. students, all masters students, and

undergraduate/dual-degree students in their fourth or higher year of

study. The course is also open to undergraduate/dual-degree students

in their third year of study, provided their CPI is 8.50 or

higher. The instructor regrets having to deny registration to many

interested undergraduate/dual-degree students in their third year; the

restriction is necessary to keep the class strength within the

capacity of the largest available classroom on campus.

The course does not formally have other courses as

prerequisites. However, lectures and assignments will assume that the

student is comfortable with probability and

algorithms. Introduction

to Probability by Grinstead and Snell is an excellent resource on

basic probability. Any student who is not comfortable with the

contents of chapters 1 through 7 (and is unable to solve the

exercises) is advised against taking CS 747.

The course has an intensive programming component: based on ideas

discussed in class, the student must be able to independently design,

implement, and evaluate programs in python. The student

must be prepared to spend a significant amount of time on the

programming component of the course.

Students who are unsure about their preparedness for taking the

course are strongly advised to watch the lectures from week 1, 2, and

3 from

the Autumn

2020 offering, to attempt the quizzes from those weeks, and also

to go through Programming Assignment 1. If they are unable to get a

reasonable grasp of the material or to negotiate the quizzes and

programming assignment, they are advised against taking CS 747.

Evaluation

Grades will be based on three programming assignments, each worth

15 marks; a mid-semester examination worth 20 marks; and an

end-semester examination worth 35 marks. All assessments will be

based on individual work.

The programming assignments must be turned in through Moodle. Late

submissions will not be evaluated; they will receive no marks.

Students auditing the course must score 50 or more marks in the

course to be awarded an "AU" grade.

Moodle

Moodle will be the primary course management system. Marks for the

assessments will be maintained on the class Moodle page; discussion

fora will also be hosted on Moodle. Students who do not have an

account on Moodle for the course must send TA Santhosh Kumar

G. a request by e-mail, specifying the roll number/employee

number for account creation.

Academic Honesty

Students are expected to adhere to the highest standards of

integrity and academic honesty. Academic violations, as detailed

below, will be dealt with strictly, in accordance with the

institute's procedures

and disciplinary

actions for academic malpractice.

Students are expected to work alone on all the programming

assignments and the examinations. While they are free to discuss the

material presented in class with their peers, they must not discuss

the contents of the assessments (neither the questions, nor the

solutions) with classmates (or anybody other than the instructor and

TAs). They must not share code, even if it only pertains to

operations that are perceived not to be relevant to the core

logic of the assessment (for example, file-handling and

plotting). They also may not look at solutions to the given

quiz/assignment or related ones on the Internet. Violations will be

considered acts of dishonesty.

Students are allowed to use resources on the Internet for

programming (say to understand a particular command or a data

structure), and also to understand concepts (so a Wikipedia page or

someone's lecture notes or a textbook can certainly be

consulted). It is also okay to use libraries or code snippets for

portions unrelated to the core logic of the

assignment—typically for operations such as moving data,

network communication, etc. However, students must cite every

resource consulted or used, whatever be the reason, in a file

named references.txt, which must be included in the

submission. Failure to list any resource used will be considered an

academic violation.

Copying or consulting any external sources during the examination

will be treated as cheating.

If in any doubt as to what is legitimate collaboration and what is

not, students must ask the instructor.

Texts and References

Artificial Intelligence: Foundations of Computational Agents, David

L. Poole and Alan K. Mackworth, 2nd edition, Cambridge

University Press,

2017. On-line

version.

Reinforcement Learning: An Introduction, Richard S. Sutton and

Andrew G. Barto, 2nd edition, MIT Press,

2018. On-line

version.

Algorithms for Reinforcement Learning, Csaba Szepesvári,

Morgan & Claypool,

2009. On-line

version.

Selected research papers.

-

On the Likelihood that One Unknown Probability Exceeds Another in View of the Evidence of Two Samples

William R. Thompson, 1933

-

Some Studies in Machine Learning Using the Game of Checkers

Arthur L. Samuel, 1959

-

Probability inequalities for sums of bounded random variables

Wassily Hoeffding, 1963

-

Asymptotically

Efficient Adaptive Allocation Rules

T. L. Lai and Herbert Robbins, 1985

-

Self-Improving Reactive Agents Based On Reinforcement Learning, Planning and Teaching

Long-ji Lin, 1992

-

Practical Issues in Temporal Difference Learning

Gerald Tesauro, 1992

-

Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning

Ronald J. Williams, 1992

-

On the Complexity of Solving Markov Decision Problems

Michael L. Littman, Thomas L. Dean, and Leslie Pack Kaelbling, 1995

-

Average Reward Reinforcement Learning: Foundations, Algorithms, and Empirical Results

Sridhar Mahadevan, 1996

-

An Analysis of Temporal-Difference Learning with Function Approximation

John N. Tsitsiklis and Banjamin Van Roy, 1997

-

Learning to Trade via Direct Reinforcment

John Moody and Matthew Saffell, 2001

-

Finite-time

Analysis of the Multiarmed Bandit Problem

Peter Auer, Nicolò Cesa-Bianchi, and Paul Fischer, 2002

-

Autonomous helicopter flight via reinforcement learning

Andrew Y. Ng, H. Jin Kim, Michael I. Jordan, and Shankar Sastry, 2003

-

Tree-based Batch Mode Reinforcement Learning

Damien Ernst, Pierre Geurts, and Louis Wehenkel, 2005

-

Bandit based Monte-Carlo Planning

Levente Kocsis and Csaba Szepesvári, 2006

-

Evolutionary Function Approximation for Reinforcement Learning

Shimon Whiteson and Peter Stone, 2006

-

Half

Field Offense in RoboCup Soccer: A Multiagent Reinforcement Learning

Case Study

Shivaram Kalyanakrishnan, Yaxin Liu, and Peter Stone, 2007

-

Batch Reinforcement Learning

in a Complex Domain

Shivaram Kalyanakrishnan and Peter Stone, 2007

-

Adaptive Treatment of Epilepsy via Batch-mode Reinforcement Learning

Arthur Guez, Robert D. Vincent, Massimo Avoli, and Joelle Pineau, 2008

-

Self-Optimizing Memory Controllers: A Reinforcement Learning Approach

Engin İpek, Onur Mutlu, José F. Martínez, and Rich Caruana, 2008

-

Reinforcement learning of motor skills with policy gradients

Jan Peters and Stefan Schaal, 2008

-

An Empirical Evaluation of Thompson Sampling

Olivier Chapelle and Lihong Li, 2011

-

The KL-UCB Algorithm for Bounded Stochastic Bandits and Beyond

Aurélien Garivier and Olivier Cappé, 2011

-

On Optimizing Interdependent Skills: A Case Study in Simulated 3D Humanoid Robot Soccer

Daniel Urieli, Patrick MacAlpine, Shivaram Kalyanakrishnan, Yinon Bentor, and Peter Stone, 2011

-

Thompson Sampling: An Asymptotically Optimal Finite-Time Analysis

Emilie Kaufmann, Nathaniel Korda, and Rémi Munos, 2012

-

Human-level control through deep reinforcement learning

Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel

Veness, Marc G. Bellemare, Alex Graves, Martin Riedmiller, Andreas

K. Fidjeland, Georg Ostrovski, Stig Petersen, Charles Beattie, Amir

Sadik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Daan

Wierstra, Shane Legg, and Demis Hassabis, 2015

-

Randomised Procedures for Initialising and Switching Actions in Policy Iteration

Shivaram Kalyanakrishnan, Neeldhara Misra, and Aditya Gopalan, 2016

-

Mastering the game of Go with deep neural networks and tree search

David Silver, Aja Huang, Chris J. Maddison, Arthur Guez, Laurent Sifre, George van den Driessche, Julian Schrittwieser, Ioannis Antonoglou, Veda Panneershelvam, Marc Lanctot, Sander Dieleman, Dominik Grewe, John Nham, Nal Kalchbrenner, Ilya Sutskever, Timothy Lillicrap, Madeleine Leach, Koray Kavukcuoglu, Thore Graepel, and Demis Hassabis, 2016

-

Spatial interactions and optimal forest management on a fire-threatened landscape

Christopher J. Lauer, Claire A. Montgomery, and Thomas G. Dietterich, 2017

-

Mastering the game of Go without human knowledge

David Silver, Julian Schrittwieser, Karen Simonyan, Ioannis Antonoglou, Aja Huang, Arthur Guez, Thomas Hubert, Lucas Baker, Matthew Lai, Adrian Bolton, Yutian Chen, Timothy Lillicrap, Fan Hui, Laurent Sifre, George van den Driessche, Thore Graepel, and Demis Hassabis, 2017.

-

-

A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play

David Silver, Thomas Hubert, Julian Schrittwieser, Ioannis Antonoglou, Matthew Lai, Arthur Guez, Marc Lanctot, Laurent Sifre, Dharshan Kumaran, Thore Graepel, Timothy Lillicrap, Karen Simonyan, and Demis Hassabis, 2018.

-

-

Five Proofs of Chernoff's Bound with Applications

Wolfgang Mulzer, 2019

-

Optimising a Real-time Scheduler for Railway Lines using Policy Search

Rohit Prasad, Harshad Khadilkar, Shivaram Kalyanakrishnan, 2020

-

Deep Reinforcement Learning for Autonomous Driving: A Survey

B Ravi Kiran, Ibrahim Sobh, Victor Talpaert, Patrick Mannion, Ahmad A. Al Sallab, Senthil Yogamani, Patrick Pérez, 2021

Communication

This page will serve as the primary source of information regarding

the course, the schedule, and related announcements. The Moodle page

for the course will primarily be used for recording grades.

E-mail to the instructor must contain "[CS747]" in the header.

Schedule

-

July 29: Welcome; Introduction to the course. Lecture 0.

-

August 2: Multi-armed Bandits. Lecture 1.

Summary: Coin-tossing game; Definition of stochastic multi-armed bandit; Definition of algorithm, ε-first and ε-greedy algorithms.

Reading: Sections 2, 2.1, 2.2, 2.3, Sutton and Barto (2018).

Code and data: coins.py, biases.txt, eg.py (two bugs in the code demo-ed in class are fixed in this version).

Practice question: Question 1 in 2016 mid-semester paper.

-

August 5: Multi-armed Bandits. Lecture 2.

Summary: Graph of E[rt] versus t; Definition of regret; Achieving sublinear regret with GLIE sampling; Lai and Robbins's lower bound on regret.

References: Class Note 1; Theorem 1, Lai and Robbins (1985).

Practice question: Question 1 in 2018 mid-semester paper.

-

August 12: Multi-armed Bandits. Lecture 3.

Summary: UCB, KL-UCB, Thompson Sampling algorithms. (Section on concentration bounds in slides deferred.)

Reading: Section 1, Figure 1, Theorem 1, Auer et al. (2002); Sections 1–3, Garivier and Cappé (2011); Chapelle and Li (2011).

References: Thompson (1933), Kaufmann et al. (2012).

Practice question: Part a from Week 3 question in 2021 weekly quizzes.

-

August 16: Multi-armed Bandits. Lecture 4.

Summary: Hoeffding's Inequality, "KL" Inequality; Proof of upper bound on the regret of UCB.

Reading: Wikipedia page on Hoeffding's Inequality; Proof of Theorem 1, Auer et al. (2002).

References: Hoeffding (1963); Mulzer (2019).

Practice question: Question 1 in 2017 mid-semester paper.

-

August 19: Multi-armed Bandits. Lecture 5.

Summary: Interpretation of Thompson Sampling; Survey of bandit formulations.

Reference: Wikipedia page on Bayesian inference.

Practice question: Week 3 question in 2020 weekly quizzes.

-

August 23: Markov Decision Problems. Lecture 6.

Summary: Definition of Markov Decision Problem, policy, and value function; Existence of optimal policy; MDP planning problem; Bellman equations.

Reading: Chapter 3, Sutton and Barto (2018).

Practice question: Question 3 in 2015 mid-semester paper.

-

August 26: Markov Decision Problems. Lecture 7.

Summary: Continuing and episodic tasks; Infinite-discounted, total,

finite-horizon, and average reward structures; Applications of MDPs

References: Section 2.2, Mahadevan (1996); Ng et al. (2003); Lauer et al. (2017).

Practice question: Question 7 in 2018 end-semester paper.

-

August 30: Markov Decision Problems. Lecture 8.

Summary: Banach's Fixed-point Theorem; Bellman optimality operator; Proof of contraction under max norm; Value iteration.

Reading: Appendix A, Szepesvári (2009).

Practice question: Week 5 question in 2021 weekly quizzes.

-

September 2: Markov Decision Problems. Lecture 9.

Summary: Linear programming and its application to MDP planning.

Reference: Littman et al. (1995).

Practice question: Question 4 in 2020 end-semester paper.

-

September 6: Markov Decision Problems. Lecture 10.

Action value function; Policy improvement; Bellman operator; Proof of Policy improvement theorem; Policy Iteration family of algorithms; Effect of history and stochasticity.

Reading: Sections 1 and 2, Kalyanakrishnan et al. (2016).

Reference: Class Note 2.

Practice question: Week 6 question in 2020 weekly quizzes.

-

September 9: Markov Decision Problems. Lecture 11.

Summary: Complexity bounds for MDP planning; Analysis of Howard's PI and Batch-switching PI.

References: Howard (1960); Melekopoglou and Condon (1994); Mansour and

Singh (1999); Hollanders (2012); Hansen et al. (2014); Hollanders et

al. (2014); Gerencsér et al. (2015) Kalyanakrishnan et

al. (2016); Kalyanakrishnan et al. (2016a); Gupta and Kalyanakrishnan

(2017); Taraviya and Kalyanakrishnan (2019); Ashutosh et

al. (2020).

-

September 13: Reinforcement Learning. Lecture 12.

Summary: The Reinforcement Learning problem; Upcoming topics; Applications.

References: Tesauro (1992); Silver et al. (2018); Ng et al. (2003); Mnih et al. (2015); İpek et al. (2008); Guez et al. (2008); Moody and Saffell (2001).

-

September 15: Mid-semester examination.

-

September 23: Reinforcement Learning. Lecture 13.

Summary: Prediction and control problems; Ergodic MDPs; Model-based algorithm for acting optimally in the limit.

Reading: Class Note 3.

Reference: Wikipedia page on Ergodic Markov chains.

Practice question: Question 3d in 2015 mid-semester paper.

-

September 27: Reinforcement Learning. Lecture 14.

Summary: Monte Carlo methods for prediction; On-line implementation of Monte Carlo policy evaluation.

Reading: Sections 5, 5.1, Sutton and Barto (2018).

Reference: Robbins and Monro (1951).

Code: montecarlo.py.

Practice question: Question 2 in 2015 end-semester paper.

-

September 30: Reinforcement Learning. Lecture 15.

Summary: Maximum likelihood estimates and least squares estimates;

Bootstrapping; TD(0) algorithm; Convergence of Monte Carlo and batch

TD(0) algorithms.

Reading: Sections 6, 6.1, 6.2, 6.3, Sutton and Barto (2018).

Practice question: Question 5 in 2020 end-semester paper.

-

October 4: Reinforcement Learning. Lecture 16.

Summary: n-step returns; TD(λ) algorithm; Model-free control: Q-learning, Sarsa, Expected

Sarsa.

Reading: 6.4, 6.5, 6.6, 7, 7.1, Sutton and Barto (2018).

Practice question: Question 1 in 2017 end-semester paper.

-

October 7: Reinforcement Learning. Lecture 17.

Summary: Need for generalisation in practice; Soccer as illustrative example; Linear function approximation and geometric view; Linear TD(λ).

Reading: Sections 9, 9.1, 9.2, 9.3, 9.4, 12, 12.1, 12.2,

Sutton and Barto (2018).

References: Tsitsiklis and Van Roy (1997), Kalyanakrishnan et al. (2007).

Practice question: Question 6 in 2015 end-semester paper.

-

October 11: Reinforcement Learning. Lecture 18.

Summary: Tile coding; Control with function approximation; Tsitsiklis and Van Roy's counterexample.

Reading: 9.5, 9.6, 9.7, 11, 11.1, 11.2, 11.3, Sutton and Barto (2018).

Practice question: Question 3 in 2019 end-semester paper.

-

October 14: Reinforcement Learning. Lecture 19.

Summary: Policy search; Case studies: humanoid robot soccer, railway scheduling.

References: Urieli et al. (2011), Prasad et al. (2020).

Practice question: Week 10 question in 2021 weekly quizzes.

-

October 18: Reinforcement Learning. Lecture 20.

Summary: Policy gradient methods; Policy gradient theorem; REINFORCE; REINFORCE with a baseline; Actor-critic methods.

Reading: Sections 13, 13.1, 13.2, 13.3, 13.4, 13.5, Sutton and Barto (2018).

References: Williams (1992), Moody and Saffell (2001), Peters and Schaal (2008), Silver et al. (2016), Ravi Kiran et al. (2021).

Practice question: Question 6 in 2020 end-semester paper.

-

October 21: Reinforcement Learning. Lecture 21.

Summary: Batch RL; Experience replay; Fitted Q Iteration.

Reading: Kalyanakrishnan and Stone (2007).

References: Lin (1992), Ernst et al. (2005).

Practice question: Week 12 question in 2020 weekly quizzes.

-

October 25: Reinforcement Learning. Lecture 22.

Summary: Dyna-Q; Application of model-based RL to helicopter control.

Reading: Sections 8, 8.1, 8.2, 8.3, 8.4, Sutton and Barto (2018); Ng et al. (2003).

Practice question: Week 4 question in CS 748 2021 weekly quizzes.

-

October 28: Evolution and Learning. Lecture 23.

Summary: Evolution; Evolutionary algorithms; Lamarckian and Darwinian evolution, Baldwin effect; NEAT+Q algorithm.

Reading: Whiteson and Stone (2006).

-

November 1: Search. Lecture 24.

Summary: Search problem instances; Uninformed search; Heuristics and A* search.

Reading: Chapter 3, Poole and Mackworth (2017).

-

November 4: Game trees, Decision-time planning. Lecture 25.

Summary: Minimax search; Rollout policies; UCT algorithm.

Reading: Sections 8, 8.1, 8.8, 8.9, 8.10, 8.11, Sutton and Barto (2018).

References: Samuel (1959); Kocsis and Szepesvári (2006).

-

November 7: AlphaGo. Lecture 26.

Summary: AlphaGo; Review of course.

Reading: Silver et al. (2016).

References: Silver et al. (2017), Silver et al. (2018).

-

November 17: End-semester examination.

Assignments